Atomic I/O letters column #114

Originally published 2010, in Atomic: Maximum Power Computing. Reprinted here March 7, 2011. Last modified 16-Jan-2015.

The perfectly obvious "_" error

I've got 3 hard drives in my computer, and one of them's going bad. Well, I think it is - not spinning up on startup, and/or not spinning up after Windows puts it to sleep. Result: frozen computer. Whatever the problem is, it's turned into a BIGGER problem now; I'm typing this on my netbook because my (Windows 7 Ultimate) PC refuses to boot AT ALL any more.

When I turn the PC on, it gives the normal no-errors single POST beep, and carries on until when Windows ought to start loading... and then it stops, at a black screen with a flashing grey cursor line in the top left corner.

I've been plugging and unplugging drives for a while now - booting from just C: with neither of the other drives plugged in (which worked), booting with only one of them plugged in, etc - trying to figure out which one had the problem (tried different plugs from the PSU and different SATA cables, too, to no effect). Now I've got C: and the non-broken other drive connected, and all I get is the black screen with cursor. I'm afraid I've static-zapped C:, or bumped a head assembly out of alignment, or something.

Do YOU know what I've done?

Hamish

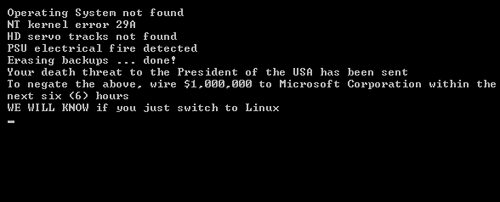

All I'm saying is, it could be worse.

Answer:

Yes, I reckon I do.

The problem you have now is probably not associated with the dodgy drive. And this new problem, at least, is probably easy to fix.

What you've done, in plugging and unplugging drives and starting the system with different combinations of them, is change the order of your motherboard's drive list. So when you've got anything more than your boot drive plugged in, it's one of the other drives that the computer's trying to boot from. Since neither of those drives has an operating system on it, you get The World's Least Informative Error, that stupid black screen with the flashing cursor.

Go into BIOS setup (usually by pressing delete shortly after the POST beep), navigate to the drive-list, and rearrange it so that your actual C drive is the first one. (This can be trickier than you'd think, if all of the drives are the same make and model and you have to identify them by which SATA port they're plugged into. Or just rearrange them blindly until you get it right; with only two drives, this of course only requires one rearrangement.)

Next, go to the actual boot-order screen, and make sure "hard disk", or the name of the drive you've put at the top of the drive-order list, is the first boot device, or second after the optical drive if you want the option of booting from CD/DVD. When your Windows drive is back at the top of the list, your computer should be no more broken than it was when you started. Which is more than can be said for a lot of situations like this.

(Different BIOSes do this stuff differently. You may find the boot-order and the drive-order all on the one setup page, for instance.)
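To make the failure mode concrete, here's a toy Python model of it. This is a sketch, not how any real BIOS is written: the `Drive` class, the `boot` function and the drive names are all made up for illustration, and it assumes the firmware only ever tries the first drive in its hard-disk list.

```python
from dataclasses import dataclass


@dataclass
class Drive:
    name: str      # hypothetical label, as it might appear in BIOS setup
    has_os: bool   # True if the drive holds a bootable operating system


def boot(drive_list):
    """Mimic a firmware that only tries the first drive in its list."""
    if not drive_list:
        return "no drives found"
    first = drive_list[0]
    if first.has_os:
        return f"booting from {first.name}"
    # No fallback to later drives: this is the uninformative failure case.
    return "black screen, flashing cursor"


system = Drive("C: (Windows 7)", has_os=True)
data = Drive("data drive", has_os=False)

scrambled = [data, system]  # order after plugging and unplugging drives
fixed = [system, data]      # order after rearranging the list in BIOS setup

print(boot(scrambled))
print(boot(fixed))
```

Nothing about either drive changes between the two calls; only the list order does, which is why the fix is a setup change rather than a repair.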

Brainless NICs

On this page, you write "USB network adapters are, by the way, likely to use more CPU power than even a basic PCI adapter, let alone a proper brand name server-quality NIC."

I do not know why basic and server-quality NICs would not be the same in CPU utilization, and I would like to know. Can you point me to any information?

P

Answer:

This is the same deal as with many other pieces of hardware where some amount of the processing work has been moved from expensive logic in the device itself to the computer's CPU, via cheap driver software. Modems, printers, audio adapters and of course graphics adapters have all done this, though even entry-level modern graphics cards commonly have a lot of onboard acceleration hardware.

In the case of network cards, you've got "server-class" cards that, for instance, have a full TCP stack in hardware - a "TCP Offload Engine".

"Consumer-class" cards, in contrast, are quite dumb, and make the driver do most of the work. USB network adapters have even more software load, because of the added overhead of the USB interface itself.

In pretty much all of these cases, though, the difference is no longer important for the vast majority of users, including "enterprise" applications. (That article of mine about USB doodads is from 2004.)

People hated the old software-based "Winmodems" because they only worked on Windows (their heavyweight drivers just hadn't been made for other OSes), and they were often buggy, and they imposed a significant CPU-usage load on the relatively slow computers of the time.

Today, doing full Ethernet - even gigabit Ethernet - largely in software will have a readily measurable effect on performance if you're redlining the network bandwidth, but that effect is not one that the user is likely to actually notice.
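For a rough sense of scale, here's a back-of-the-envelope Python sketch of how many frames per second a saturated gigabit link delivers, and how many CPU cycles that leaves per frame. The per-frame overhead is standard Ethernet framing (8 bytes of preamble and start delimiter, 14 of header, 4 of frame check sequence, 12 of inter-frame gap). The 3 GHz single-core clock is purely an assumption, and the function names are made up for this example.

```python
LINK_BPS = 1_000_000_000          # gigabit Ethernet line rate, bits per second
OVERHEAD_BYTES = 8 + 14 + 4 + 12  # preamble+SFD, header, FCS, inter-frame gap
ASSUMED_CPU_HZ = 3_000_000_000    # hypothetical 3 GHz core, not from the article


def frames_per_second(payload_bytes):
    """Frames per second on a fully saturated link for a given payload size."""
    wire_bits = (payload_bytes + OVERHEAD_BYTES) * 8
    return LINK_BPS / wire_bits


def cycles_per_frame(payload_bytes):
    """CPU cycles available per frame if one assumed core handles them all."""
    return ASSUMED_CPU_HZ / frames_per_second(payload_bytes)


for payload in (1500, 46):  # full-size and minimum-size Ethernet payloads
    print(payload, round(frames_per_second(payload)),
          round(cycles_per_frame(payload)))
```

With full-size frames this works out to roughly 81,000 frames per second, which leaves the assumed core tens of thousands of cycles per frame. That's a measurable load, but not one most people would feel.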

There are, for instance, those Bigfoot network cards specifically optimised to improve multiplayer game performance. But the improvement compared with a normal cheap PCI network card, even when the Bigfoot Killer NICs were brand new a few years ago, was very small, unless the test machine really had no CPU time to spare at all. The difference between a Killer NIC and a server-class Intel NIC pretty much fades into the noise.

(The purchase-price difference can be significant, though - unglamorous server hardware like high-class network cards is commonly available cheap on eBay. Exotic "enthusiast" PC hardware like the Killer NICs tends to hold its value irrationally well.)

Now that very fast multi-core CPUs are standard equipment for even low-end computers, you can do all sorts of outrageous crap in software without unacceptable performance penalties: RAID, emulation of other platforms, virtualisation, HD video playback, etc.

That said, if you're building a PC to do some custom network-appliance job that requires umpteen NICs that all need to deliver some large fraction of their rated throughput all of the time, you could very well need "proper" network cards to get the job done. If it were me, I'd initially build the system with a bunch of cheap PCI/PCIe-x1 NICs and see if that was good enough, but other people's mileage, and tolerance for possible problems, may of course vary.

DIY goop

Toothpaste is an oldie but a goodie as a temporary thermal-paste solution.

What about Selleys Araldite (TM) as a fairly permanent solution?

Lots of (ahem) old-timers swear by it.

Rocky

Answer:

Just about anything will work as a thermal interface material, if you don't ask too much of it. If the mating surfaces are very flat and firmly clamped together, and if you don't need to move a huge amount of heat through the junction, then any randomly-chosen gooey substance that won't evaporate when heated for long periods of time will be adequate. This includes epoxy glue, but also dozens of other hardware-store substances, from axle grease to silicone sealant.

Most of those substances, definitely including epoxy, are quite lousy conductors of heat in absolute terms. If you apply them in the proper thermal-grease way - a very, very, translucently-thin layer, when you're connecting very flat surfaces - then they'll pretty much only fill gaps that would otherwise be filled by very non-conductive air, and the net result will be a small but worthwhile improvement.

If one or both of the surfaces are a bit uneven, though, a lot more of the heat load will have to move through your thermal compound, and low-conductivity compounds will become a problem. This situation is still very possible, even for modern CPUs and CPU coolers; modern mainstream CPUs all have large heat-spreaders with rather less than mirror-smooth surfaces, and many heat-sinks are a bit rough, too.

Too much goop between flat surfaces is bad, because it prevents direct contact. Too little goop between uneven surfaces is bad, because it leaves air spaces. (There are also now CPU coolers that have very flat bases with gaps in them, because the base is made out of a parallel array of heat-pipes, or something.)
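A crude way to put numbers on this is to treat the goop as one uniform slab and use the one-dimensional conduction formula: temperature drop = power x thickness / (conductivity x area). The Python sketch below does that. It ignores direct metal-to-metal contact, so it overstates the drop for flat, well-clamped surfaces. The conductivities are round approximate values (air about 0.026 W/m·K, unfilled epoxy about 0.2, and an assumed 3 for a decent thermal grease), and the 100 W load, 30 mm square contact patch and layer thicknesses are all hypothetical.

```python
# Approximate thermal conductivities in W/(m*K); round illustrative values.
CONDUCTIVITY = {
    "air": 0.026,
    "plain epoxy": 0.2,
    "thermal grease": 3.0,  # assumed figure; real products vary widely
}

POWER_W = 100.0           # hypothetical CPU heat load
AREA_M2 = 0.030 * 0.030   # hypothetical 30 mm x 30 mm contact patch


def layer_temp_drop(power_w, thickness_m, conductivity, area_m2):
    """Temperature drop (kelvin) across a uniform slab of material."""
    return power_w * thickness_m / (conductivity * area_m2)


for thickness_um in (10, 100):  # a thin film versus a too-thick layer
    for material, k in CONDUCTIVITY.items():
        drop = layer_temp_drop(POWER_W, thickness_um * 1e-6, k, AREA_M2)
        print(f"{thickness_um:>3} um of {material}: {drop:.1f} K")
```

Under these assumptions, a thin film of even a poor conductor like epoxy beats the same thickness of air by a wide margin, but making the layer ten times thicker multiplies the temperature drop by ten, which is where low-conductivity compounds get into trouble.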

Also, the more energy you need to move through the junction, the more critical the goop becomes. All sorts of unlikely substances will work to connect a low-power netbook-type CPU to a full-sized heat-sink, but things change if you're cooling a high-end multi-core chip, especially if it's overclocked.

Even in that situation, the actual wattage transferred isn't that amazing by normal engineering standards. But the maximum allowable temperature of a CPU is low enough to make thermal-transfer performance quite important. If you're cooling a few big fat separate audio-frequency transistors in an amplifier then you can connect them to the heat-sink via washers made from (relatively) thick mica or silicone rubber, and be fine. If you're cooling a billion tiny fussy multi-gigahertz CPU transistors, though...

(Heat output of modern CPUs can now be way up in the hundreds of thousands of watts per square metre; fortunately, the die is only a few hundred square millimetres in size.)
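The arithmetic behind that figure is simple enough to sketch in Python. The 100 W and 250 square millimetre values below are assumptions picked for illustration, not measurements of any particular chip, and the function name is made up.

```python
def heat_flux_w_per_m2(power_w, die_area_mm2):
    """Heat flux in watts per square metre for a die area given in mm^2."""
    # One square metre is 1,000,000 square millimetres.
    return power_w * 1_000_000 / die_area_mm2


ASSUMED_POWER_W = 100.0       # hypothetical CPU power draw
ASSUMED_DIE_AREA_MM2 = 250.0  # hypothetical die area

print(heat_flux_w_per_m2(ASSUMED_POWER_W, ASSUMED_DIE_AREA_MM2))
```

With those assumed numbers the result is 400,000 watts per square metre, even though the total is only 100 watts.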

This said, you can whip up your own thermal goop by taking any ordinary light oil or glue and adding very conductive stuff to it, like super-fine aluminium powder. Metal-filled epoxy - the big brand of which is that famous fixer of fuel tanks, engine blocks and B-52 wings, J-B Weld - makes pretty good thermal adhesive all by itself, provided you're happy for that CPU and that heat-sink to remain mated for as long as they both shall live. (If you mix the epoxy with regular heat-sink grease, you can make a weaker glue that allows disassembly.)

It's also now easy to get silicone-based thermal goop which is thin enough that most excess will slowly squeeze out the edges of the contact patch, so you can apply it a bit too heavily and let it find its own level. This is usually more than good enough for even high-end overclocked CPUs, provided case ventilation is also good and the ambient temperature isn't outrageously high.

If you're using thicker goop, or are just a perfectionist, you can get the compound-layer right by trial and error. Goop up the top of the CPU, install the cooler, uninstall it again, and see what the contact-patch on heat-sink and CPU now looks like. If it's a fine web of goop with plenty of almost-clean metal showing, then unless the heat-sink's got a very rough surface, you've probably used the right amount of goop. If you see no metal then there's too much goop. If there are actual dry patches, then unless the mating surfaces are ultra-flat mirrors, you need more goop.

Don't spend too long admiring your handiwork, though. One human hair or flake of dandruff can wedge the CPU and cooler far enough apart to significantly reduce heat transfer!

Benchmark Reviews' eighty-way thermal-goop comparison goes over a variety of different goop application techniques for different kinds of heat-sink.