Apparently, I'm upgrading.

Review date: January 14, 2006. Last modified 03-Dec-2011.

So, there was this lightning storm. Very impressive.

All of my various computers shut down just fine, when the lights went out and the UPSes started beeping and the cats couldn't figure out what the heck was going on.

My main computer, though, didn't power back up again.

I don't think it got fried by a power spike - it was plugged into one of the good UPSes, not just one of the good value ones. But it hadn't been turned off for, oh, a few months, and it just didn't want to start up again. It POSTed, it almost finished printing "Memory Test:" on the screen, then it hung.

As I turned it off and on and off and on while swapping RAM around to see if that actually had something to do with the problem, it got worse. Eventually, it didn't POST at all. Perhaps the rings were very tight.

Now, if an old voodoo lady cursed me in such a way that I was without my main computer and couldn't get a replacement, I could keep on getting stuff done. This place is rotten with other computers, and I've got offline and online backups of everything, most of which I can find.

And the non-starting machine was clearly not completely toast. I don't know what part of it's actually become an ex-component, but a bit of easter egging would no doubt have it back on its little plastic feet quickly enough. I wouldn't even need to get new parts; I've got spare everythings around here, though it would sting a little if I had to switch from 3.4GHz of Pentium 4 to 1.6GHz of emergency standby old-model slow-as-a-wet-week Socket 478 Celeron.

But, y'know, screw that. Every problem is an opportunity in disguise, after all.

It was time to upgrade.

Fast.

Like, a new working computer, in my office, in about 12 hours, tops.

So I placed an order with m'verygoodfriends at Aus PC Market. If you buy a computer's worth of gear from them, they'll assemble it for free, so that's what I told 'em to do. The only difference between the service they gave me and the service they'd give any random Australian punter (they don't ship outside Australia and New Zealand) is that I got a phone call telling me when the box was together, indicating that it was time for me to drive downhill for a while to collect it.

(Usually, Aus PC Market post or courier stuff out to their customers; they're not a normal retail store. You can come and pick stuff up if you're in a big hurry, but that doesn't mean you'll get a discount on their regular delivery-included prices. Then again, many mainly-mail-order stores charge extra if you dare invade their inner sanctum with your uncouth consumerish ways.)

Aaaanyway, the assembly service was lovely. All the gruntwork magically done, including fiddly stuff like plugging in front panel USB port leads.

But it created a mild problem with this review, because unlike my other occasional whole-computer pieces, I didn't have a chance to photograph the components separately.

Given that y'all have probably seen pictures of motherboards, hard drives, RAM modules, flux capacitors and frayed ropes passing over big wooden pulleys on previous occasions, I don't feel a great urge to apply the pry-bar to this box to separate everything just for the sake of pretty pictures.

So you'll get shots of the machine together and working, and you'll be grateful. Back in my day we had to walk uphill both ways in the methane snow just to see a 320 by 240 mono video grab of a 6502 covered in mud, don't you know.

On with the show.

The brain

This was my excuse to get a dual core machine, so I did.

Dual core is suddenly the In Thing, not least because of Intel's recently introduced "Yonah" Core Duo processors, which seem to be powering about nine out of every ten new laptops. Not to mention Apple's new MacBook Pro and revised iMac.

The "Core", um, core, is very much the same sort of thing as the Pentium M that came before it. Lower clock speed than the P4, more work per clock cycle, low power consumption, and thus generally a Good Thing. And both Intel and AMD are also currently selling dual core versions of their mainstream desktop CPUs, and have been for long enough that there's no stupid price premium on them.

For most users, though, dual core isn't important. If you're eyeing a Core Duo laptop, hang about a bit and see if a cheaper single core version (which will be called, of course, "Core Solo"...) turns up.

This all started with Intel's "Hyper-Threading", which made a single-core CPU look like two processors, and was almost always worth having, but which certainly didn't give you a real two CPU system. The speed gain from HT (well, from versions of HT after the first one, anyway) is well worth the minor compatibility problems that dual CPUs create, but it isn't worth buying a new CPU for by itself.

Dual core processors give you a genuine honest-to-goodness dual CPU computer, with two real separate cores with their own real separate cache memory and their own adequately (for PC purposes) wide paths to the rest of the system. It's just all packed into one CPU socket.

Modern Windows flavours will take advantage of a couple of CPUs automatically, noticing when one chip's working hard and unloading tasks onto the other. But for most people's purposes, this doesn't matter. It's pretty easy to load up a desktop PC with enough tasks that it'll do things faster if it's got another CPU, but most people never actually do that for more than a few seconds at a time. Usually, when a user of a current Windows PC is twiddling their thumbs and waiting for something to happen, they're waiting for the hard drive, not the CPU(s).

And modern CPUs are fast enough that even when you do manage to set up a real dual-CPU-loving situation, the tasks would probably be done more than quickly enough for most people by a single CPU anyway.

Now that dual core's becoming normal - it'll be the only option for mainstream users, in due course - there'll be more and more multithreaded software that actually makes use of two CPUs even when you've only got that one CPU-intensive application running. At the moment, though, most software's single-CPU only, so you have to have multiple programs working hard at once.

And, just to make things even easier, it's difficult to figure out exactly how fast a given CPU runs, these days. AMD has been using abstract numbers to describe their CPU speed for ages now, because their chips do more work per clock tick than Intel's. AMD were losing sales, back in the earlier Athlon days, because consumers just compared clock speed and assumed a P-III had to be faster, when it actually wasn't.

Now Intel are doing the same thing, only more so. They're giving all of their current processors three digit ID codes that mean... well, pretty much nothing. The AMD numbers have only a tenuous connection to real world performance (they've always insisted that they weren't meant to be a yardstick for comparing Athlons with Pentiums, even though they knew perfectly well that that was how they were being used), but the Intel numbers are even less informative.

Anyway, the highest-numbered AMD chip at the moment that anybody vaguely sensible is going to buy is the "X2 4800+".

(I'm ignoring the "FX" series chips that're only marginally faster than their technologically extremely similar siblings, but cost far more. I'd also be ignoring brand new overpriced can-you-even-really-buy-one releases, but the only one of those that AMD has at the moment is another darn FX.)

The 4800+ is a dual core chip with one megabyte of level 2 cache for each core (most, but not all, slower X2s have 512Kb of L2 cache per core; the difference isn't trivial, but also isn't important for most users), clocked at 2.4GHz.

The 4800+ sells for $AU1320, delivered, here in Australia at the moment.

That's a lot.

The highest-numbered single core Athlon is the 4000+. One core, 1Mb cache, 2.4GHz. Half of an X2 4800+, in other words, and selling for $AU544.50.

Nobody gets twice the performance out of a dual CPU PC.

It's possible, in really CPU-heavy and highly multithreadable tasks, to get something approaching twice the speed by going from one to two CPUs (going from two to four gives a less drastic improvement; my thanks go to Tom's Hardware for providing another much-needed point on the CPUs/performance graph). But about 1.9 times is as much as you'll ever see when going from one to two CPUs, and that's for tasks like pro 3D and video effects rendering. For other tasks, a 1.7 times improvement is a very good score.

For everyday work, you're unlikely to get better than a 1.5 times improvement, and a lot of tasks - including most everyday desktop work and some heavy-duty stuff like certain kinds of scientific computing - won't get faster at all, because they just can't be split up into multiple threads.
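If you want to play with those numbers, Amdahl's law is the usual back-of-the-envelope model for them. It isn't something any of the figures above were derived from; it's just an idealised formula, and the "parallel fraction" values below are assumptions worked backwards from the 1.9, 1.7 and 1.5 times figures. Real software has overheads the formula ignores, so treat this as a rough sketch.

```python
# Amdahl's law sketch. The parallel fraction p is an ASSUMPTION,
# back-derived from the two-CPU speedups quoted in the text.

def speedup(p: float, cpus: int) -> float:
    """Ideal speedup for a task whose fraction p can run in parallel."""
    return 1.0 / ((1.0 - p) + p / cpus)


def parallel_fraction(two_cpu_speedup: float) -> float:
    """Invert Amdahl's law for the two-CPU case: S = 1 / (1 - p/2)."""
    return 2.0 * (1.0 - 1.0 / two_cpu_speedup)


if __name__ == "__main__":
    for label, s2 in [("rendering", 1.9), ("very good", 1.7), ("everyday", 1.5)]:
        p = parallel_fraction(s2)
        print(f"{label:>10}: p={p:.3f}  "
              f"2 CPUs={speedup(p, 2):.2f}x  4 CPUs={speedup(p, 4):.2f}x")
```

Under this model, a job that only manages 1.5 times on two CPUs tops out at 2 times on four, which is one way to see why the second pair of processors is so much less exciting than the first.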

A couple of CPUs can still make your computer more responsive while it's working hard on something else, but it doesn't take much CPU horsepower to load a Web page or check your e-mail while some big job completes. The incremental load isn't actually that large, and if the big job plays nice with CPU utilisation, you'll still be OK with one CPU. As, indeed, most people are.

If your primary use for monster CPU power is playing games, you don't need dual core at all. Yes, various games can, technically, benefit from two CPUs these days, but unless you've decided to fire up Battlefield 2 while you wait for your DivX DVD rip to encode, or something, then that difference will be extremely unimpressive.

If you're doing things that only need one CPU for pretty much optimal performance, you can (unsurprisingly) get the same speed for a lot less money if you go for single core. The very fastest - at stock speed - Intel and AMD cores still come in their single-core models, and cheapskates, er, canny shoppers can just pick up something like a nice Venice-core Athlon 64 3500+ for seven-tenths of the price of an X2 3800+, wind it up just as far or further, and enjoy FX-YOUHAVETOOMUCHMONEY performance while saving enough to buy a perfectly good, or highly questionable but cool, used car.

The dual core P4s also don't offer the fastest CPU bus speed - although the actual performance difference that the maximum single core 1066MHz bus offers over the maximum dual core 800MHz isn't, actually, a big deal. Bus speed has always been just icing on the core speed cake for the vast majority of desktop PC tasks, unless there's some problem with CPU and RAM speed mismatches, which there isn't here.

The reason I haven't been rambling on about the P4s yet is that, at the moment, Athlons are better value.

Intel's competition for the X2 Athlons are the dual-core P4s, known as the "P4 Ds". There's the "820" (see what I mean about incomprehensible model names?) at 2.8GHz, the 830 at 3GHz, and the 840 at 3.2GHz.

An Athlon 64 X2 3800+ will beat a Pentium D 830 quite consistently, though not by a wide enough margin that you'd be likely to notice if someone magically swapped your CPU and motherboard.

But, coincidentally, Aus PC Market are currently selling the D 830 and the X2 3800+ for the same price - $AU528 delivered.

Motherboard prices fuzz things up a bit, but not by enough to matter. If all you care about is stock-speed dual core computing, the X2's a better buy.

If you want a single-core stock-speed machine, you can pretty much go either way, especially if you're sensible and shopping at the lower end of the current CPU models (because, as always, faster chips cost more per megahertz, and the speed increments really aren't that impressive).

But I wanted dual core. And I also wanted to overclock.

Overclocking an Athlon X2 Whatever is not difficult. You take your cheap(ish) X2 3800+, you put it on pretty much any motherboard that does not cause the Counter-Strike kids at the local Net cafe to point and laugh, and you use the BIOS setup program to wind the CPU bus speed up from its default 200MHz to, say, 240MHz. The 3800+'s multiplier can be changed, but it starts out at 10X, which is as fast as it can go - so you can only overclock by winding up the FSB. Which isn't really an FSB any more for modern CPUs, and certainly not for A64s with their onboard memory controller, but which serves the same purpose from the overclocking point of view.

The FSB-only overclocking is not a problem, because all half-decent Socket 939 motherboards let you reduce the HyperTransport multiplier (so the CPU doesn't try to talk to the rest of the system too quickly) from the default 5X to, say, 4X. They also let you lock the peripheral bus speeds to their default figures, so you don't end up with the time-honoured my-hard-drive-is-trying-to-run-at-147MHz-and-freaking-out situation.

Aaaanyway, after doing all this, my 3800+ was running perfectly happily at 2400MHz, giving it the same performance as an X2 4600+. The chopped HyperTransport multiplier marginally slows the system, but since a 4600+ costs almost twice as much as a 3800+, I still count this as a complete success.

And there's some more headroom in this chip. I didn't even have to increase the core voltage to get this 20% overclock. The thing's still running its stock AMD CPU cooler, for Pete's sake.
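The arithmetic behind all this is just multiplication, and can be sketched as below. The function name is made up for illustration; the figures are the ones quoted above (200MHz default bus, 10X CPU multiplier, 5X default HyperTransport multiplier).

```python
# Overclocking arithmetic for a bus-speed-only overclock.
# athlon64_clocks() is a hypothetical helper, not part of any real tool.

def athlon64_clocks(bus_mhz: float, cpu_mult: float, ht_mult: float) -> dict:
    """Core and HyperTransport clocks are both multiples of the bus speed."""
    return {
        "core_mhz": bus_mhz * cpu_mult,
        "ht_mhz": bus_mhz * ht_mult,
    }


stock = athlon64_clocks(bus_mhz=200, cpu_mult=10, ht_mult=5)
overclocked = athlon64_clocks(bus_mhz=240, cpu_mult=10, ht_mult=4)

# Fractional overclock relative to stock core speed.
gain = overclocked["core_mhz"] / stock["core_mhz"] - 1

if __name__ == "__main__":
    print("stock:      ", stock)
    print("overclocked:", overclocked)
    print(f"core overclock: {gain:.0%}")
```

Note that dropping the HyperTransport multiplier to 4X at a 240MHz bus leaves the link slightly under its stock speed, which is where the marginal slowdown mentioned above comes from.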

The X2 3800+, like the X2 4200+ and 4600+, uses the "Manchester" core, which is newer and cooler than the Toledo core used in the other X2s. The reason for this isn't very profound - the Manchester's just got half of the Toledo's 1024Kb of L2 cache per core (actually, I think the transistors are there, but permanently disabled; Manchester chips may or may not have failed quality assurance as Toledos). That cuts out a lot of transistors, and a bit of heat, keeping the Thermal Design Power for the chip below 90 watts.

The smaller cache, as seen on various "budget" chips in the past, may or may not make the Manchester more overclockable than the Toledo, depending on who you ask. Once you start pushing the boundaries of A64 overclocking, it can get a little complicated. But with good enough cooling, there's a decent chance a 3800+ will do 2.7GHz. 2.5GHz is pretty much certain, with a small voltage boost. An X2 3800+ at 2.6GHz will comfortably beat a stock-speed X2 4800+ in any test you care to name, despite costing four-tenths as much.

Even 2.7GHz, though, is only 12.5% higher than what I managed without changing the core voltage at all. So, in the interests of stability, I stuck with a humble 2.4GHz. At which speed WCPUID thought my processor was an "Athlon 64 10200+" (so did Astra32, by the way). Which was gratifying all by itself.
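For anyone who wants to check the headroom sums, here's a small sketch. The clock targets and prices are the ones quoted in this review; the helper name is invented for the example.

```python
# Headroom and price arithmetic, using figures quoted in the review.

STOCK_MHZ = 2000   # X2 3800+ at 200MHz x 10
MY_MHZ = 2400      # what I'm actually running
PRICES_AUD = {"X2 3800+": 528.00, "X2 4800+": 1320.00}


def headroom(target_mhz: float, base_mhz: float) -> float:
    """Fractional clock increase of target over base."""
    return target_mhz / base_mhz - 1


price_ratio = PRICES_AUD["X2 3800+"] / PRICES_AUD["X2 4800+"]

if __name__ == "__main__":
    for target in (2500, 2600, 2700):
        print(f"{target}MHz: {headroom(target, STOCK_MHZ):.1%} over stock, "
              f"{headroom(target, MY_MHZ):.1%} over 2.4GHz")
    print(f"3800+ costs {price_ratio:.0%} of a 4800+")
```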

Every time I take a big CPU speed step like this, I like to play the Look How Much Faster game.

SiSoft Sandra 2005 reported 21602 MIPS and 9757 MFLOPS (from both cores together). This isn't comparable with the apparently-weedier specifications of supercomputers from days gone by, but it is at least vaguely comparable with past desktop computers, as long as they were tested with similarly pointless bit-twiddling big-number synthetic benchmarks.

My faithful old Amiga 500's original 7MHz 68000 CPU managed about 0.7 MIPS.

This machine's almost 31,000 times as fast.

And about a frajillion times faster for floating point operations, since the Amiga had no maths coprocessor, even after I upgraded it to a fire-breathing 5 MIPS '030.

(That was my second CPU upgrade. The first was a 68010, which shared with the similarly inexpensive "16MHz cacheless 68000" upgrade the thrilling fact that it made the Amiga maybe 5% faster, tops. And yet, people did it.)

The top of the PC line when the A500 first came out in 1987 was an 80386DX at 20MHz. That was a much faster chip than the Amiga's 1979-vintage CPU (the state of the Motorola CPU art in 1987 was the '030), but it still only managed about 5 MIPS and 1.5 MFLOPS. About 1/4300th and 1/6500th of this Athlon's both-cores-firing performance.
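The Look How Much Faster sums are simple division, sketched here with the numbers quoted above. The old-machine figures are the approximate ones from the text, so the ratios are no more precise than they are.

```python
# Ratio of the new machine's Sandra scores to the old machines' rough figures.

athlon_x2 = {"mips": 21602, "mflops": 9757}   # both cores together
amiga_mips = 0.7                               # 7MHz 68000, approximate
i386 = {"mips": 5, "mflops": 1.5}              # 20MHz 80386DX, approximate

ratios = {
    "vs Amiga 500 (MIPS)": athlon_x2["mips"] / amiga_mips,
    "vs 386DX-20 (MIPS)": athlon_x2["mips"] / i386["mips"],
    "vs 386DX-20 (MFLOPS)": athlon_x2["mflops"] / i386["mflops"],
}

if __name__ == "__main__":
    for label, ratio in ratios.items():
        print(f"{label}: about {ratio:,.0f} times")
```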

While we're gazing backward, how about good old Quake 2 in software rendered mode?

Back when DDR memory was the hot new thing, a 1.2GHz Athlon (single-core, of course, but Quake 2 isn't multithreaded, so dual core chips have very nearly zero advantage over singles) could strain its way to a playable-enough 31fps for the demo2.dm2 demo, in 1024 by 768 software mode.

My new box can do 39.5fps. At 1600 by 1200.

Not a big frame rate difference, but it's pushing 2.44 times as many pixels. At 1024 by 768, the 2.4GHz Athlon 64 manages 77 fps. This means it's genuinely faster, clock for clock, than the pre-64 Athlons.
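Here's the working, as a sketch. It treats frames per second per megahertz as a crude clock-for-clock measure, which is an assumption on my part; software Quake 2 isn't purely CPU-bound, so take the per-clock figure as indicative only.

```python
# Quake 2 software-mode comparison, using the frame rates quoted above.

def pixels_per_second(width: int, height: int, fps: float) -> float:
    """Raw pixel throughput for a given resolution and frame rate."""
    return width * height * fps


# How many more pixels a 1600x1200 frame has than a 1024x768 one.
pixel_ratio = (1600 * 1200) / (1024 * 768)

# Same resolution (1024x768) on both machines, normalised by clock speed.
old_fps_per_mhz = 31 / 1200    # 1.2GHz Athlon
new_fps_per_mhz = 77 / 2400    # 2.4GHz Athlon 64 X2
per_clock_gain = new_fps_per_mhz / old_fps_per_mhz

if __name__ == "__main__":
    print(f"pixels per frame ratio: {pixel_ratio:.2f}")
    print(f"old box: {pixels_per_second(1024, 768, 31):,.0f} pixels/s")
    print(f"new box: {pixels_per_second(1600, 1200, 39.5):,.0f} pixels/s")
    print(f"per-clock gain at 1024x768: {per_clock_gain:.2f}x")
```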

This is unusual. Newer PC processors are usually slower, clock-for-clock, than the chips they supplant - which is why it was dumb to buy the first Pentium III, and the first Pentium II, and the first Pentium. The advantage of the new architectures is usually that they can run at much higher clock speeds than the old one could manage - or, at least, that they can after the manufacturers work the bugs out and do a core revision or two.

The original Athlon was no slouch in its day, primarily thanks to the input of some former DEC engineers, who grafted the Athlon's evolved-K6 core onto the EV6 bus DEC's Alpha processor used. AMD apparently chose the name "Athlon" as short for "Decathlon".

But, clock-for-clock, the A64's faster, before you even start thinking about its ability to run 64 bit operating systems that normal people don't care about yet.

Cool.

Want more pointless numbers? Of course you do.

As I write this, I've searched 4,219,157,393,126,296 nodes over the 1981 days I've been working on distributed.net's OGR project. For most of that time, I've had four or five computers chugging away at the task, whenever they're not doing something else.

The distributed.net cracker is happy to use multiple CPUs, and is also a fine example of a program that actually does something at least slightly useful, but has close to zero I/O load. So raw dumb processor speed makes the d.net cracker faster in almost perfect proportion to the clock speed and number of cores.

And it likes Athlons, too; they've always performed disproportionately well for d.net compared with Pentium Whatevers (though PowerPC chips are even faster - d.net screams on multi-core PowerPC Macs).

This 2.4GHz X2 computer by itself, with nothing else helping, could chew through as many OGR nodes as I've done already in about two-thirds of the time it's taken me.

Heck, it's so fast that it could search the entire RC5-72 keyspace in only 7.7 million years!
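If you'd like to see what key rate that implies, the sketch below works backwards from the 7.7 million year figure. The only things it adds are the size of a 72 bit keyspace (2 to the 72nd) and an assumed 365.25 day year; the key rate it prints is implied by those, not something I measured separately.

```python
# Back-of-the-envelope distributed.net arithmetic.

SECONDS_PER_YEAR = 365.25 * 24 * 3600   # ASSUMPTION: Julian year

rc5_72_keyspace = 2 ** 72
years_to_exhaust = 7.7e6                # figure quoted in the text
implied_keys_per_sec = rc5_72_keyspace / (years_to_exhaust * SECONDS_PER_YEAR)

ogr_nodes = 4_219_157_393_126_296       # my OGR total so far
ogr_days = 1981
nodes_per_day = ogr_nodes / ogr_days

if __name__ == "__main__":
    print(f"implied RC5-72 rate: {implied_keys_per_sec / 1e6:.1f} million keys/s")
    print(f"my OGR average:      {nodes_per_day:.3e} nodes/day")
```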

The RAM

Acute observers of the above CPU picture...

...would have noticed that I also appear to have some RAM with little lights on it.

Indeed I do. Here it is in operation, with the clear plastic air duct of the case (which I'll get to in a moment) making things look a bit more arty.

I went for two 1Gb modules of Corsair PC-3200 memory, rated for CAS 2 at 400MHz, at $AU437.80 the pair (as I write this, the same modules are more expensive; RAM prices fall as time goes by, as with every other computer component, but they're pretty volatile in the short term).

They've got lights on 'em because I bought the "TwinX Pro" flavour. They also have extra-big heat spreaders of questionable utility (though spreaders do give you something to grab the RAM by that isn't the chips themselves).

The LED bar graphs indicate the level of memory activity, and are very, very close to being utterly useless.

I suppose if you had a bunch of machines that were all supposed to be working hard, and you could see the RAM modules in them (because of your show-off windowed cases, or the geek's standard cooling improver, the Case With No Lid On It), you could see at a glance which machine had hung, or finished its job, because its RAM had few or no lights on. Unless, of course, the computer hung with something still pounding on the RAM, or you were running some slopsucker or other.

So, yeah. Pretty much useless.

Why'd I buy these modules, instead of the LED-less ones that have the exact same chips and cost less?

Because, on the day that I ordered, Aus PC had stock of the Pro modules, but not the plain ones, and I didn't want to wait.

Also, the LEDs don't actually cost very much. They've got more impact on the price of cheaper modules; if you're getting a couple of 1Gb sticks, you're only talking about 3% more money for der blinkenlichten.

The box

Silverstone's TJ06 is a good case. Solid, looks good, easy to work on, and it's got a novel cooling system which works well.

And it's not too expensive, either.

When I reviewed it a while ago, the black version was $AU214.50 including Sydney metropolitan delivery (delivery elsewhere in the country for big things like cases costs extra), the silver version was $AU225, and the black one with the show-off side window was $AU236.50.

Now the black and silver models are both $AU214.50, and the windowed version's dropped to $AU225.50.

So I got that, because I think eleven dollars is a fair price to pay for some show-off value.

Yes, new readers, your growing suspicions are correct: As with the others in my very occasional series of whole-PC reviews, I actually paid for this computer.

It really is the computer I'm using now, nobody offered it to me for a sum whose negotiability might perhaps have depended on how complimentary my review was, and it's not made out of parts that Aus PC Market are having trouble selling.

Also, to the best of my knowledge, I exist. I have also not been issued a White House press pass in return for specialised personal services.

By the way, you can't see the side window in the above picture of the TJ06, partly because the above picture is not actually of the version of the TJ06 that has a side window, but mainly because the window is on the other side of the case.

(Or is that the main reason? What is the main reason for the invisibility of a thing that isn't there, but couldn't be seen even if it were? Five page essay, due Wednesday.)

The window's on the other side because the TJ06 is a pseudo-BTX case, with the motherboard on the opposite side of the box and upside down compared with regular ATX cases. So the window's on the right hand side, as you look at the case from the front.

The motherboard

I based my new machine on an Asus A8N-SLI. The "SLI" part, in case you don't know what it is or still think it's something that became obsolete in 1999 or so, means you can install two Nvidia PCIe graphics cards and get... a lot less than twice the speed, for pretty much any real-world 3D rendering task you care to name.

ATI's alternative to SLI is called "Crossfire", and the arguably-worth-buying version of it (as opposed to the previous definitely-not-worth-buying version) is now actually finally more-or-less available.

Both SLI and Crossfire work with some mid-range cards, but you can get single cards that're faster, so that's not very exciting. To get an SLI/Crossfire system that's actually significantly faster than a single top-flight card, you need to buy two pretty-much-top-dollar cards. For the price of those two cards by themselves, you can buy a whole 3GHz P4 system, including monitor and keyboard and mouse and delivery, and I'm not talking about some corner-cut piece of brand name rubbish, either.

This kind of pricing is a sacred tradition, of course. Back in the first year or two of 3dfx Voodoo 2 SLI, that was pretty darn expensive too, especially when you added on the price of the normal video card you needed to do 2D mode.

Since I was actually paying for this computer, and I've only got a 21 inch monitor, my "SLI" motherboard has only one video card on it.

And that's all it'll probably ever have.

Why buy a motherboard with two x16 PCIe slots, which are only useful, so far, for video cards, and only put one card in it, if you don't intend to upgrade to two?

Well, x16 slots are compatible with cards made for the tiny x1 slot, so you don't miss out on anything if you get a mobo with an x16 instead of an x1. There's no point getting very excited about this, though, because the x1 slot has so far been a complete dud.

People who've been fiddling with PCs for a while will not be surprised by this, because little tiny slots are always useless.

The old little tiny slots were usually the Audio/Modem Riser (AMR) type. That actually was a standard, but a lot of people had never heard of it, even if their computer had such a slot. AMR slots could accept little tiny audio and/or modem cards, and sometimes there was actually a card in the slot, because the systems integrator that made the machine had crunched the numbers and found that the discount they got for 5000 units actually made the cards worth buying.

Home users did not get such discounts, and the cards didn't offer anything you couldn't get from other hardware that worked on any motherboard whether or not it had an AMR slot, so the cards were never worth buying for normal people. Few retail stores even bothered to stock them.

After AMR came CNR, which was better in theory but not in practice, and there was also Advanced Communications Riser (ACR), which broke with tiny-useless-slot tradition by using a full-sized (but reversed and offset) PCI connector. ACR's backwards compatible with AMR cards, and thus arguably twice as useful. But twice nothing is still nothing.

PCIe x1 really ought to be useful for something. It's got the same theoretical bandwidth as a normal PCI slot, on a much better bus; it's not reserved for only one or two unpopular kinds of card.

But the Curse of the Little Slot has apparently struck again. There aren't many x1 cards, and the ones that exist are so expensive that nobody buys them. If you want a TV tuner card or something, you can get the same thing much cheaper for a plain old PCI slot, a few of which still come on all normal PCIe boards.

There's hope for x1, though. This Matrox graphics card looks as if it could be rather cool, if you can indeed stack all of your slots with 'em (including x16, since x1 cards work fine in x16 slots) and make a video wall.

But, again, that's not a big deal for most users, and it's not as if PCI graphics cards don't exist, and aren't cheap.

Incidentally, one market for x1 graphics cards is another blast from the past - brand name machines with "PCIe Graphics!!!" that don't actually have a PCIe x16 slot on the motherboard. They've got a (lousy) PCIe adapter built into the board, hardwired to the bus, and the only way to upgrade is with a card that fits one of the few puny PCIe x1 slots.

The same manufacturers pulled the same stunt with "AGP graphics!!!" machines in the past.

Remember: Friends don't let friends buy Dell desktops. Laptops yes, monitors yes (or, as I'll explain shortly, possibly "Yes! Yes! Oh, God, YES!"), desktops no. No matter how much lipstick their show pigs sport.

Expansion slots of all kinds don't matter much these days, for most users. It's normal for nice-mainstream motherboards today to come with a network adapter (gigabit Ethernet, yet), surround audio, and sufficient drive connectors for six or more devices, all built in as standard equipment. The only card you have to plug in - and the only card I had to plug into this machine - is the graphics adapter.

A lot of PC enthusiasts still use add-on sound cards, because integrated audio may have a lot of channels these days but still often does not offer the highest of fi. But all you need for a fancy audio board is one PCI slot. (Personally, I'm using this, which doesn't need a slot anyway.)

So motherboard choice isn't, in the final analysis, that critical. The A8N-SLI is made for the tweaker market and so offers high stability (by consumer motherboard standards) as well as its overclocking features. It's therefore not a bad buy even if you don't much care about overclocking.

Incidentally, on the left hand side of the above picture you can see the little nForce chip cooler (it's still referred to as a northbridge chip, though it technically isn't; if you're looking for an after-market cooler, try searching for "northbridge coolers"), whose fan bearings lasted only seven months of almost continuous operation before going noisy (I'm writing this at the end of those seven months).

You can oil the bearings of one of these teeny fans to get them to limp on a bit longer, but when a small fast fan starts buzzing, it's probably already past helping. My solution to this problem was to remove the little fan entirely (you can do that without removing the whole cooler assembly; three small screws under the blades hold the fan itself in place) and Blu-Tack (I don't move this computer very often...) the first 80mm fan I clapped eyes on (which turned out to be the clear yellow one from this old review) in its place.

That was an easy enough fix for me, but it wouldn't be for many computer users. Then again, seven months non-stop would be a year and a half of run time for someone whose computer is turned on for a more usual number of hours per day. Still, I've seen mobo-chip fans that last a lot longer than that, and there are also plenty of motherboards these days with big passive system-chip coolers or fancy-pants heat-pipe arrangements that let them use bigger, slower, longer-lasting fans, or no fan at all. There's even an A8N variant that's like that, now. If a board with a better mobo chip cooler doesn't cost a whole lot extra - and it generally doesn't - buy it.

We now return you to the rest of the review from January 2006.

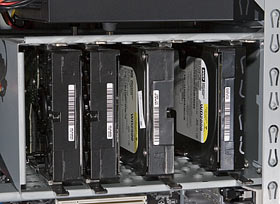

Because the A8N's an nForce4 board, you get four SATA drive sockets (supporting one drive each) and two PATA sockets (two drives each). This is a feature I actually care about, since I like just being able to stick a new drive in if I fill up yet another one.

This machine's got four hard drives and one DVD burner in it at the moment, so I've got room for another three drives if I want.
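The drive-count sums, for the record, as a trivial sketch using the socket counts above:

```python
# Drive capacity of the board: (number of sockets, drives per socket).
sockets = {"SATA": (4, 1), "PATA": (2, 2)}

capacity = sum(count * per_socket for count, per_socket in sockets.values())
installed = 4 + 1            # four hard drives plus one DVD burner
free = capacity - installed

if __name__ == "__main__":
    print(f"{capacity} devices possible, {installed} installed, {free} free")
```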

Also thanks to the nForce4 chipset, there's the usual RAID 0, 1 and 0+1, but you can spread the array across the eight possible devices if you like. Old "RAID-capable" boards only supported RAID for the four drives you could run from their separate secondary controller.

This is still the same cheap pseudo-hardware RAID the old boards offered, though, so don't expect to get monstrous speed gains from it, or use it to replace a proper industrial full-hardware RAID controller. But if you just want to make a medium duty array for bulk file storage or semi-pro video editing or something, it'll work.

And the A8N's got a built-in gigabit Ethernet adapter, passably fancy audio, and a bunch of USB ports (or not so many, if you don't plug in enough little case cables). And Windows-based overclocking software that isn't really worth using, and full proper overclocking features for people who know you're meant to do this stuff in the BIOS setup program.

High-spec tweaker-boards like this have been cheap enough, for long enough, that you might as well buy them even if you don't care about most of their features. The A8N-SLI's a bit more than $AU200 delivered from Aus PC Market at the moment; the non-SLI variant is a bit less than $AU200. The cheapest mobos that're at all worth buying are around the $AU100 mark, delivered. There's just not much to be saved.

Yes, you can find ECS or Jetway or whatever boards, that support current-model CPUs, for less than $US50 delivered.

If you buy one, you deserve what you get.

The video card

A large portion of the video card market still wants AGP, not PCIe, cards. They don't want to swap out a perfectly good motherboard just for a new graphics card. Fair enough.

There is, however, at least some good reason to go to PCIe, now. It was a frankly dumb idea in 2004 and for a fair bit of 2005, but now it makes sense.

The boring GeForce 6800 that's sitting, probably perfectly undamaged, in my old machine, is still faster than what most people are running. It's more than adequate for smooth enjoyment of current games at impressive resolutions. But it's an AGP card, and the new machine, she don't got an AGP slot.

What usually happens when there's an interface change like this is that the new cards - or RAM modules, or hard drives, or CPUs - start out unfeasibly expensive, partly because they're so new and partly because wholesalers and retailers are worried about getting in much stock, and the manufacturers themselves are often worried about making lots of units, lest the demand not turn up and the hardware go stale on the shelf.

After a period of volatile stock-market-ish supply and demand agitation, the market and the vendors finally click into sync and the product starts flowing, whereupon the price plunges, and suddenly the old technology starts to become a lousy deal compared with the new stuff.

So it's been with PCIe and AGP.

Aus PC don't get a lot of call for AGP 6800s any more, but you're looking at about $AU450 delivered for a new one.

But PCIe models are thick on the ground. You can get a basic one (not the cut-down XT version) for less than $AU300 delivered. And that's with 256Mb of video memory, not the mere 128Mb I had on my AGP card.

I wasn't going to settle for a mere 6800 in the new machine, though, so I stared at the price/performance graph for a little while and opted for a 7800 GT.

Which I may, depending on my strength of will, soon be trading in on something better.

This is not because a 7800GT in any way sucks for games. It's a very marginally cut-down 7800GTX at a better price; my 256Mb Leadtek GT goes for a mere $AU693 delivered (as usual for video cards, umpteen different brands are made in the same factory; buy based on price, not name), while the GTX costs about $AU100 more. The real loonies can drop more than $AU1200 for the "frankly, we're surprised anybody bought one" 512Mb super-GTX.

But you name it, a 7800GT will play it. Nicely. At 1600 by 1200 (or the widescreen near-equivalent), with a soupçon of anti-aliasing and anisotropic filtering.

It's got two DVI outputs (adaptable to VGA, so you can drive any kind of pair of monitors), it's got TV out, you can run it in SLI mode.

It's nice. No current game stretches it.

It can't run every AVS preset at a consistent 60fps at high res, but Deep Thought couldn't do that.

The 7800GT is an entirely satisfactory video card, as you'd darn well want it to be for the money.

But the 7800GT can't drive the Screen Of Your Dreams.

Dell, you see, have now actually released their monstrous 30 inch, 2560 by 1600, UltraSharp 3007WFP LCD. The 3007's even available on the Australian Dell site - though how much stock they've got, I don't know. Dell's previous flagship LCD, the 24 inch 2405FPW, was briefly on sale in Australia and then vanished for many moons as Dell tried to satisfy global demand; so may it be with this new one.

If, like me, you've got a "21 inch" CRT monitor - with a 20 inch actual viewable diagonal, and the ability to display 1600 by 1200 tolerably sharply - no ordinary LCD replacement is a very exciting prospect.

An LCD won't look very much better than a CRT unless you're a goose who can't set up a CRT properly. LCDs have sharp-edged pixels, but that's not, if you ask me, actually a selling point. And consumer LCDs still don't have quite the colour rendering accuracy of a CRT.

And, of course, normal LCDs just don't give you much more screen area, or resolution, than 20 inches of CRT.

The 2405FPW is a 1920 by 1200 widescreen; 1.2 times the pixel count of a 1600 by 1200 monitor. That doesn't hurt, but it's not all that thrilling either.

But a 30 inch monitor is bigger than a lot of people's televisions (how many geeks, I wonder, have been standing in their living room with a tape measure looking awestruck?). And the 3007WFP has two point one three times the pixel count of 1600 by 1200.
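If you'd like to check those pixel-count multiples yourself, here's a minimal Python sketch. The function name is made up for illustration; the resolutions are the ones quoted in this piece.

```python
def pixel_count(width, height):
    """Total number of pixels for a given resolution."""
    return width * height

# Baseline: the 1600 by 1200 a good "21 inch" CRT can display
baseline = pixel_count(1600, 1200)

# 24 inch widescreen (1920 by 1200) and 30 inch (2560 by 1600)
ratio_24_inch = pixel_count(1920, 1200) / baseline
ratio_30_inch = pixel_count(2560, 1600) / baseline

print(f"24 inch: {ratio_24_inch:.2f} times the pixels")
print(f"30 inch: {ratio_30_inch:.2f} times the pixels")
```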

A monitor this big and with this resolution is not at all a new thing. Apple have been selling their 30-inch Apple Cinema HD Display for ages now. The Dell has better official specs, which may or may not be a bunch of hooey; LCD brightness doesn't matter (full brightness from any modern LCD is Too Damn Bright for any properly set-up modern workstation), and contrast ratio and response time specs are poorly defined and unpoliced.

And, apparently, the initial 3007s will use the same LG panel you get in the Apple 30 inchers at the moment. But Dell may switch to Samsung panels later on. Or maybe not. Perhaps the Apple's lower specs are just what its specs were when it launched, with a different model of panel, or just an earlier revision of the same panel. Life contains many mysteries.

The important part is, the Dell's a bit cheaper. Not as truly impressively cheap as the 2405FPW was when it launched (hence the shortages...), but still a bit cheaper than the Apple.

Here in Australia, the 3007 lists for $AU2898, which actually converts to a slightly lower price than its US list of $US2199. It's $AU1101 cheaper than the Apple alternative, which has been sitting on $AU3999 locally for some time. The 3007 also won't make a PC user look as if they meant to buy a Mac but ran out of money after getting the monitor.

(Oh, and the 3007 also doesn't come with a bunch of Dell's patented "hahaha, dumbass" EULAs for software that you don't even know what it is until you say "yes" by pressing any key, including "N" and "Escape".)

But. There is. A catch.

You can't use either 30 inch LCD unless you've got a dual link DVI graphics adapter.

Dual link DVI is not the same as just having two DVI connectors on your card. It's a single connector with two data channels, for double the bandwidth, which you need to paint that many pixels in a timely fashion.

The designers of DVI were somewhat stingy, you see. Single link only goes to 1920 by 1200 at 60 frames per second.

Now, technically you can use one of these monitors from a regular single link card. You won't be able to run the monitor at its native resolution, though - you'll be stuck in fuzzy interpolated lower-res mode and feeling like a schmuck.
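Here's a rough Python sketch of why the big panels need the second link. It assumes the 165MHz single link pixel clock ceiling from the DVI spec (that number isn't from this article), and it only counts visible pixels. Real video timings add blanking on top, so actual pixel clocks are somewhat higher than these figures; treat this as a lower bound, not a timing calculator. The function names are made up for illustration.

```python
# Assumption: single link DVI tops out at a 165MHz pixel clock (per the DVI spec).
SINGLE_LINK_LIMIT_MHZ = 165.0

def active_pixel_rate_mhz(width, height, refresh_hz=60):
    """Millions of visible pixels per second; ignores blanking intervals."""
    return width * height * refresh_hz / 1e6

def links_needed(width, height, refresh_hz=60):
    """1 if the visible pixel rate fits in a single link, else 2."""
    rate = active_pixel_rate_mhz(width, height, refresh_hz)
    return 1 if rate <= SINGLE_LINK_LIMIT_MHZ else 2

for w, h in [(1600, 1200), (1920, 1200), (2560, 1600)]:
    rate = active_pixel_rate_mhz(w, h)
    print(f"{w} by {h}: {rate:.2f}MHz visible, links needed: {links_needed(w, h)}")
```

The 2560 by 1600 case blows through the single link ceiling on visible pixels alone, so no amount of clever blanking-trimming will squeeze it down one link at 60 frames per second.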

If you've got dual link, though, everything Just Works. And the Dell is even meant to work with a PC; the Apple will, but Apple's support won't want to know you if you have problems.

The 7800GT can be a dual link card. Look at the Macs with 7800GT adapters; they're definitely dual-link-enabled, of course, because it'd be a bit embarrassing for Apple if they made a big fancy current-model computer that didn't work with their best monitor.

The 7800GTX can be dual link, as well - on both of its outputs, not just one.

I think the deal is that all flavours of the 7800 chipset are dual-link-capable, but it's up to the card manufacturers whether they use that feature - and if they can save ten cents by not using it, they probably will, because practically nobody's using monitors that big yet. Nvidia don't help at all, here; they just don't tell consumers about dual link capabilities for anything but their pro "Quadro" cards, because they'd rather you bought those.

Anyway, this card probably has one dual link port. All of the current Leadtek 7800s seem to have one; this card doesn't quite match the current Leadtek 7800GT in appearance, but only because of the decorations on the chip cooler. So maybe I'm all right.

Otherwise, though, I'll have to buy a GTX if I want to use a 3007WFP.

Or, perhaps, I'll get a Radeon X1600 or X1800; they've both got dual link, too. ATI have been much more forthcoming than Nvidia on the subject; every X1000-series card except the X1300 has dual link.

Both of the 512Mb 7800GTX's ports are dual link, though, so you can run a pair of 30 inchers. Ay caramba.

If you're not anticipating paying the price of a nice computer for a screen, though, you may buy a 7800GT with confidence, and ignore entirely this ridiculous situation where lots of people don't exactly know what kind of outputs their video cards have.

All 7800 cards, incidentally, seem to overclock pretty well. Which is to say, you can get real frame rate gains out of overclocking them, not that you can wind up one or another clock speed by 75%, feel all studly, and then barely be able to even measure a difference, much less see it.

The GT has 20 pixel rendering pipelines versus the GTX's 24, and seven vertex pipelines to the GTX's eight - much less of a loss than you usually have to put up with in a cut-down card, though it's not that much cheaper than the GTX, either - and is supposed to run at 400MHz core, 1000MHz RAM, versus 430 and 1200MHz for the GTX.

(When you're in 2D mode, all of these cards run the core at a much lower speed - probably 275MHz. The card's still a zillion times faster than it needs to be for any 2D task, and draws less power, and runs its giant-mosquito cooling fan at a pleasantly low, bearing-saving speed.)

With the CPU and video card both at stock speed, my new PC managed 6166 QuestionableMark05 3DMarks, at the default boring 1024 by 768 resolution but with antialiasing and anisotropic filtering both locked to 4X, to load the video card up a bit.

6166 is probably some kind of Satanic number if you try hard enough. But I wanted something even more impressive.

Many GTs can be overclocked up to around 470/1200MHz. Mine was happy as a clam at 451/1200. At that speed, with the CPU still sitting at its stock 2000MHz, I got 6991 3DMarks - a 13% improvement. Which you might actually notice.

With the CPU at 2.4GHz and the video card overclocked, I got 7090 3DMarks - an improvement of an impressive 99 3DMarks, or a miserable 1.4 per cent, depending on whether you're in the business of marketing or of truth.

The reason why there was pretty much no improvement at all over the no-CPU-overclock score is that the main look-Joe-Consumer-here's-a-simple-number 3DMark05 score is calculated only from the game test results, not the CPU test ones. In the CPU tests, the frame rate always dribbles around in the low single digits, because the processor's doing the graphics card's job for it. In those tests, a 20% overclock will give you something approaching that same percentage in improved frame rate, and accurately represent the improvement you'll see in a CPU-limited task. But it won't affect the big bold 3DMark number at all; only whatever small contribution the CPU makes to the 3D-accelerated tests will count.

Turning off the 4X FSAA and AF gave me a chunkyvision-mode score of 7742, less than 10% faster. This showed that the video card actually wasn't very loaded-up by the extra rendering of FSAA and AF, and had a lot more to give.
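For the sceptical, here's a small Python sketch that reproduces the percentages from the scores quoted above. I'm assuming the no-FSAA run was done with both the CPU and the video card still overclocked, so it's compared against the 7090 result; the helper name is made up.

```python
def percent_gain(before, after):
    """Percentage improvement going from one score to another."""
    return (after - before) / before * 100

stock = 6166            # everything at stock speed, 4X FSAA and AF
gpu_overclock = 6991    # video card at 451/1200, CPU at stock
both_overclock = 7090   # video card overclocked, CPU at 2.4GHz
no_fsaa_af = 7742       # assumed: both overclocked, FSAA and AF off

gpu_gain = percent_gain(stock, gpu_overclock)
cpu_gain = percent_gain(gpu_overclock, both_overclock)
fsaa_gain = percent_gain(both_overclock, no_fsaa_af)

print(f"Video card overclock: {gpu_gain:.1f}%")
print(f"CPU overclock on top: {cpu_gain:.1f}%")
print(f"FSAA and AF off:      {fsaa_gain:.1f}%")
```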

As I said, a 7800GT really is a rather fast card.

The drive

The new machine needed a new boot drive. Even if I decided to penny-pinch and recycle the old machine's drive, it was a mere 120Gb unit that had a lot of miles on it.

The old drive seemed fine while I was using it in USB-adapted mode to harvest old config files and such (they're on my backups, but they were more up to date and all in one place on the drive itself). Until, about half an hour ago, I knocked it off a shelf with my elbow and it hit the hard floor while running. Now it's a magnet donor, and I'll never know how long it would have lived.

In constant use, about two years is all you should expect from a consumer drive. Some survive far longer, but you shouldn't bet your happiness on it. Consumer drives expect to spend a lot of their life turned off or in spun-down sleep mode - that's why they spin back up much faster than server drives do. Consumer drives are much cheaper per megabyte than server drives, but they're not as well made; you should expect to trade 'em in pretty regularly. I think that's a perfectly good deal, as do most other people who check out the price/capacity graphs for these things over the years - but if you expect your drive to last five years or more, you may well be disappointed. Be warned.

The drive I went for is nothing very exciting - a 200Gb Western Digital Caviar SE. Good value, and good performance, for what it is - which is a nice undemanding 7200RPM consumer drive with a small-ish price tag.

(Feel free to opt for something flashier if you like.)

The Caviar SE's minuscule performance edge over cheaper drives of the same capacity comes partly from its 8Mb of cache memory (which is a pretty unremarkable feature, these days), and partly from its use of the 300 megabyte per second Serial ATA (SATA) protocol, instead of the original 150Mb/s version (with which the newer version is backward compatible).

The Serial ATA International Organization (SATA-IO) is a bit tetchy about people calling this interface "SATA II", because that was actually just SATA-IO's name while they were working on the SATA standards.

The second revision standard is, correctly, called... well, actually, their naming page doesn't really tell you. SATA/300 seems to be acceptable.

The nForce4 chipset supports SATA II Slash Whatever, so a drive like this should be running at its full 3GHz clock speed and 300 megabyte per second bus speed on an nForce4 board like the A8N-SLI. The difference you can expect this to make to your computing experience is three-fifths of sod-all, though; the peak bus bandwidth only affects transfers to and from the drive's cache memory, and even for an 8Mb-cache drive like this one, a small fraction of one per cent of the drive's capacity is the most that can be cached.

Or, more practically, the cache will fill or flush in fifty-three milliseconds even at only 150Mb/s. Compare fifty-three milliseconds with the total time taken for any significant data transfer, and you'll see that getting that first quick squirt of data dealt with in only 27 milliseconds instead of 53 isn't actually going to rock your world.
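The arithmetic behind those numbers, as a minimal Python sketch. It assumes the cache can actually be filled at the full quoted bus speed with no protocol overhead (it can't, quite), and it uses decimal megabytes and gigabytes. The names are made up for illustration.

```python
CACHE_MB = 8            # drive cache size, in megabytes
DRIVE_CAPACITY_GB = 200 # drive capacity, in (decimal) gigabytes

def transfer_time_ms(size_mb, bus_mb_per_s):
    """Best-case time to move size_mb over a bus, in milliseconds."""
    return size_mb / bus_mb_per_s * 1000

old_sata_ms = transfer_time_ms(CACHE_MB, 150)  # original SATA
new_sata_ms = transfer_time_ms(CACHE_MB, 300)  # SATA/300

# How much of the drive can the cache hold, as a percentage?
cache_percent = CACHE_MB / (DRIVE_CAPACITY_GB * 1000) * 100

print(f"150MB/s: {old_sata_ms:.1f}ms to fill the cache")
print(f"300MB/s: {new_sata_ms:.1f}ms to fill the cache")
print(f"The cache is {cache_percent:.3f}% of the drive")
```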

The very fastest 7200RPM drives around today have a peak raw read rate up around 70 megabytes per second. 10,000 and 15,000RPM drives manage more (it's not as straightforward as you might think, given different platter counts and the fact that the faster drives have lower capacities and so less data moves under the heads per revolution), but they need extra cooling, and 15000RPM drives are all still SCSI anyway.

Even if you could get a really fast drive with a SATA interface, though, 150Mb/s SATA would still give it some headroom. Not as much as you might think, thanks to the overhead that means you can never quite blow as much data down a drive cable as the spec says you can, but still some tens of megabytes per second.

So, as with previous drive bus speed hikes, ignore the speed numbers. If you're not building a serious file server, video editing machine or similar disk-whipper, and if you're not Joe Consumer whose big-brand box has much less RAM than it ought to have, none of the variables affecting hard disk speed matter much.

Oh, yes. There's a DVD+-R/W-tilde-backtick drive in my computer as well. It's a Pioneer DVR-109, which I cannibalised from the old machine. Like every current model DVD burner, the 109 can write faster than most media will allow, and is cheap. Actually, the 109's been superseded by the DVR-110, but even that only costs about a hundred bucks Australian, delivered.

These sorts of prices are making us all soft. It's good that Blu-Ray and HD-DVD are coming along to drain our wallets again. Although it disturbs me that we may be robbed of the added thrill of possibly having bought the Betamax of HD optical disc.

The power supply

If you're building a monster system today - dual core and SLI - you need a beefy power supply.

That's "beefy" compared with the PSUs we used to have in our 450MHz Celeron 300A boxes, though. PSU manufacturers have gone a little bit crazy lately.

It's easy to find PSUs with ratings above 500 watts today. Some of those ratings are even honest. And, yes, you can get a thousand watt rating if you like.

A higher output rating doesn't mean a PSU draws more power. Into a given load, all PSUs will draw roughly the same slightly higher amount of power (the excess being wasted as heat) out of the wall, depending on their efficiency (higher efficiency, less heat). Higher-rated PSUs can just handle a bigger load without misbehaving - losing voltage on one or more rails, overheating, popping an internal fuse, or whatever.
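To make that concrete, here's a minimal Python sketch of the relationship. The efficiency figures are illustrative assumptions, not measurements of any real PSU, and the function names are made up.

```python
def wall_draw_watts(load_watts, efficiency):
    """Power pulled from the wall to deliver load_watts to the PC."""
    return load_watts / efficiency

def waste_heat_watts(load_watts, efficiency):
    """The difference between wall draw and load, lost as heat."""
    return wall_draw_watts(load_watts, efficiency) - load_watts

load = 200  # watts the computer's components actually use

# Two hypothetical PSUs; note the output rating appears nowhere here
for name, efficiency in [("so-so PSU", 0.70), ("decent PSU", 0.80)]:
    draw = wall_draw_watts(load, efficiency)
    heat = waste_heat_watts(load, efficiency)
    print(f"{name}: {draw:.0f}W from the wall, {heat:.0f}W of heat")
```

The output rating isn't an input to either function. It only tells you how big "load" can get before the PSU starts misbehaving.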

My single-video-card machine doesn't need a huge PSU. The CPU needs a fair bit of juice, the single video card's not a lightweight, but about all there is to worry about beyond that is the bank of hard drives, which are no big deal. An honestly rated 350 watt PSU should have more than enough power to run it.

But where's the fun in that?

The layout of the Silverstone case meant I wanted a PSU that moved a fair bit of air.

This case has a 120mm fan at the front and another one at the back - but they do little more than feed each other, through a clear duct that channels air over the CPU.

That's a good thing, but it leaves the hard drives out. They're cooled by the air the PSU sucks through itself. So I wanted a really, um, sucky PSU.

My combined desire for air flow and a PSU that could light a football stadium while running my PC led me to choose a Silverstone ST65ZF. It has a six hundred and fifty watt rating, and ticks in enough spec-sheet boxes to suggest that it might actually be able to deliver something approaching that much power.

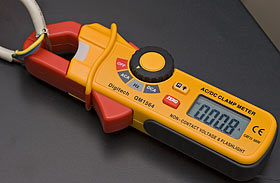

When I actually gave the CPU, video card and one hard drive plenty of things to do while running the computer from a Cable Of Dangerousness with a clamp meter on it...

...the meter told me that the computer wasn't even drawing two hundred watts.

(Australia has 230-volt-nominal mains power; 230 volts times 0.8 amps is 184 watts - 193 watts if the meter's rounding off from 0.84 amps. If you're in the USA or some other 110/120V country, your computer will draw twice as much current, but the same power, all things being equal.)
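The same sums as a short Python sketch. It treats volts times amps as watts, which is only about right when the power factor is close to 1, and it uses 115 volts as an assumed stand-in for "110/120V". The function names are made up.

```python
def power_watts(volts, amps):
    """Volts times amps; equals real power only if power factor is ~1."""
    return volts * amps

def current_amps(watts, volts):
    """Current needed to deliver a given power at a given voltage."""
    return watts / volts

AU_MAINS = 230  # volts, nominal
US_MAINS = 115  # volts, assumed midpoint of 110 and 120

displayed = power_watts(AU_MAINS, 0.8)     # what the meter shows
worst_case = power_watts(AU_MAINS, 0.84)   # if it rounded down from 0.84
us_current = current_amps(displayed, US_MAINS)

print(f"Meter reading: {displayed:.0f}W, possibly up to {worst_case:.0f}W")
print(f"Same power at {US_MAINS}V: {us_current:.1f} amps")
```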

Because the ST65ZF, naturally, has power factor correction, the current a simple non-RMS meter like this says it's drawing is probably very close to what it's really drawing.

If I really pushed everything to the limit - copying files from everywhere to everywhere else, playing a game, burning a DVD, finding alien messages in pi - I might be able to get this machine up to 250 watts or so.

This PSU would continue to feel like Superman at an arm-wrestling contest.

The ST65ZF is currently selling for $AU216.70 delivered. If I hadn't opted for More Power, I could have had a perfectly nice Antec for slightly more than half the price.

But it's good to know that if I decide to enhance my computer's cooling by adding a twenty amp automotive radiator fan, I can do so without compromising system stability. At least until severed fingers start splatting onto the video card.

Until I perform that minor upgrade, this PSU's only got one 80mm fan, which is thermally controlled. So it starts out quiet, but it moves plenty of air when it gets up to speed - which it does in quite short order, when the PSU starts sucking air over the hard drives.

The drives could still be better cooled, but I don't know whether it'd make any difference. It doesn't hurt to move a whole bunch of air over your drives, but consumer drives expect to be sweating away inside a box that had inadequate ventilation even before the air intake inhaled the family pomehuahua. Just because a 7200RPM drive is too hot to keep your hand on doesn't mean it's headed for an early (or, at least, earlier) grave.

Overall

Whew.

It's not all that amazing that people just throw up their hands and buy a brand name computer, is it?

Fortunately, you don't actually have to do heavy research to get a fast, good value new PC.

Lots of clone dealers offer pre-configured systems and let you mess around with the setup yourself - Aus PC do. I actually came up with my machine by starting with AusPC's, ahem, Ultralord SLI system, and then stripping out a bunch of stuff.

All you have to remember are a few rules. Chief among which is that the top-spec video card and CPU are always disproportionately expensive for what you get. That's been true for a long time, and will stay true for the foreseeable future.

Also, don't be too worried about CPU upgradability. It's nice to be able to boost your system speed by just dropping in a new chip, but the world doesn't often actually let you do it. If you buy a machine with a cheap budget CPU and, later, swap in what was once a stupidly expensive 2.5-times-as-fast flagship chip but now goes for $43 on eBay, fair enough. But, often, other standards move on in annoying ways, so you need a new motherboard anyway for that hot new graphics card, or now-perfectly-affordable RAM standard, or whatever; as once-exotic technologies move into the mainstream, older machines can become uneconomical to upgrade in comparison.

This is particularly pertinent right now, because the recently released Athlon 64 FX-60 is the fastest chip that'll ever be released for Socket 939, which my computer uses. AMD are moving to Socket AM2 for their future processors.

But I don't care. By the time a fast enough AMD chip comes along for me to want to upgrade to it, I'll very probably want a new mobo anyway - and, of course, my next PC may well be another Intel box.

(Hmm. "Core Quarto", anyone?)

I'm very happy with this computer and, despite the expense, kind of glad that the old P4 finally bit the dust.

But that brings me to another, more recently formulated rule, which'll be relevant for a while yet.

If you've got a 3GHz-plus single-core Pentium 4 (like my old machine) that's chugging along happily (unlike my old machine), or a similarly speedy Athlon, there is still, incredibly enough, little reason for most people to upgrade.

If you're into 3D games, then you might be pleased by a better video card, and maybe you'd go to PCIe just for that. You might also want more RAM or hard disk space - or just a new hard disk, if yours is approaching its use-by date.

But a CPU that was speedy three years ago is still perfectly acceptable today.

Three years took us from the 300MHz P-II to the 1.5GHz P4. That wasn't a very exciting processor compared with its P-III and Athlon peers, but you get the idea. It was a big darn change.

Over the last few years, though, the CPU makers just haven't been able to make their processors run a whole lot faster. They've edged the clock speeds up slowly, they've made new chips of various types - the Pentium M is a darn good piece of hardware - but they couldn't wring anything like the usual 18-monthly doubling out of the technology.

Hence, dual core chips. Intel and AMD want everybody to think they need them.

But most computer users don't need dual core, and indeed won't even notice the difference.

Don't believe the hype.

The bits I bought

Readers from Australia and New Zealand can click here to order PC parts - or whole PCs - from Aus PC Market. (They don't deliver outside Australia and New Zealand.)

AMD Athlon 64 X2 3800+ CPU

Click here!

Asus A8N-SLI motherboard

Click here!

Leadtek GeForce 7800GT 256Mb PCIe x16 video card

Click here!

Two 1024Mb sticks of CAS 2 PC3200 Corsair TwinX Pro RAM - with flashing lights!

Click here!

(For the functionally identical no-LEDs version, click here.)

Black Silverstone TJ06 case with side window

Click here!

Silverstone ST65ZF 650W PSU

Click here!

Western Digital 200Gb Caviar SE SATA/300 hard drive

Click here!