Comparison: ASUS AGP-V6600, ASUS AGP-V6600 Deluxe, Leadtek WinFast GeForce 256 and Leadtek WinFast GeForce 256 DDR graphics cards

Original review date: 18 November 1999. Last modified 03-Dec-2011.

NVIDIA's GeForce 256 chipset powers the fastest PC video cards on the market as I write this. Graphics cards based on the GeForce have been widely hailed as tomorrow's video hardware, today. Their hardware transform and lighting support (see the sidebar to the right) lets them rapidly render scenes of phenomenal complexity, opening the door for much more impressive 3D applications - which, mostly, means games.

But that doesn't mean it's a great idea to buy one right now. Sure, GeForce boards can render amazing graphics. But they're still well ahead of the curve; there's nothing else out yet with hardware T&L. S3's new Savage 2000 approaches GeForce speed, and ATI's ludicrous twin-chipset Rage Fury MAXX has quite imposing straight-line performance, but nothing else is even in the running yet. Hence, there are no games out yet that let a GeForce really show off its power.

ASUS' AGP-V6600 is your basic 32Mb GeForce board, with a simple VGA connector on the back and no frills. As of this update on the 30th of March, 2000, it's selling for $459 (Australian dollars). It's now cheaper than...

...Leadtek's WinFast GeForce 256, which has a composite and Y/C TV output (if you don't know what these video terms refer to, check out my guide here) as well as the ordinary monitor connector, and now costs $475.

The AGP-V6600 Deluxe is a much more featureful version of the plain V6600. It has PAL and NTSC S-Video (Y/C) and composite video input and output, LCD 3D glasses (which I review separately here), and hardware temperature monitoring and fan management, a significant feature all by itself, which I'll deal with in the overclocking section below. The Deluxe card also comes with SGRAM memory instead of the V6600's SDRAM, which permits substantially higher memory clock speeds, although these have little real impact on performance. Its bundled software is more impressive, as well.

And you'd want it to be, because the V6600 Deluxe costs $585 Australian.

If too much speed is barely enough for you, though, your decision has already been made - you want a DDR GeForce. Australia waited a while for these, but they've been freely available for a while now and they're selling like hot cakes.

Leadtek WinFast GeForce 256 DDR cards are still walking out the door, and they now cost $AU560, or $AU585 for a newer version with a bigger chip cooler. Like the cheaper Leadtek board, the DDR models have a TV out, but no other frills.

Sticker shock

It's been a while since a mainstream PC 3D accelerator has commanded this kind of price. Everything in the 3dfx Voodoo 3 line-up except the 3500TV with everything that opens and shuts ($570 Australian, at present) costs much less than even a plain GeForce, let alone the V6600 Deluxe. And a 16Mb TNT2 Ultra card will set you back less than $AU250. There are lots of TNT2 Model 64 cards (I review a couple here) for around the $AU200 mark.

I'm running a V6600 in my main machine at the moment, simply because it showed up first; my old ADI 17 inch monitor can't do high enough resolutions to make a DDR board worthwhile (more on this subject later...).

My standard video card prior to the V6600's arrival was a cheap OEM Diamond Viper V770 (reviewed here), running a bit faster than sticker speed at 140MHz core and 165MHz memory speed. You can get a V770 for well under $250 these days. So the big question is - does the GeForce alternative have the mumbo to justify the extra money, or should you wait until more games use its power, and its price is lower?

What you get

V6600

As well as the card itself, the V6600 box contains a slim-but-adequate manual, the driver software disc, and ASUS' current couple of freebie games, Extreme G 2 and Turok 2. Both of these are a bit long in the tooth now, and certainly don't stretch the GeForce board's capabilities, but they're better than the usual Taiwanese bundle-fodder. If you don't care about bundled games, rest assured that these two simple pack-ins can't be adding much to the purchase price.

The ASUS driver CD, which comes with both V6600 versions, includes Win95/98 and NT4 drivers (the v3.48 NVIDIA Detonator driver set and an ASUS installer program, which you don't have to use), and the DirectX 6 and 7 installers. There's also supposed to be a manual, in Adobe Acrobat format, but I couldn't find it for the life of me. The Acrobat installer was there in various different versions, but there was no manual to display with it! No matter; the paper manual is fine.

The ASUS CD also gives you the NVIDIA GeForce demo suite, including the widely-circulated TreeMark and the Wanda demo, which demonstrates how realistic a human figure can be when you've got enough rendering grunt to play with. It notably does not include the hotly anticipated Dagoth Moor Zoological Gardens demo, which will apparently be available shortly.

I also had a play with ASUS PC Probe, which doesn't come with ASUS video cards but does come with ASUS motherboards; you can also download the latest version from this FTP directory. It's a quite well engineered hardware monitor program that lets you keep an eye on, and set alarm levels for, any parts of your PC that your BIOS is capable of monitoring. PC Probe also provides quite a lot of general system information data, and is a great deal better than the dodgy system info utilities that come with many cheap video cards.

V6600 Deluxe

The V6600 Deluxe bundle is much more impressive. It includes Drakan: Order Of The Flame, which is unquestionably the finest game in which a comely wench rides a fully aerobatic dragon and is an excellent show-off title to impress the hoi polloi with the studliness of your video subsystem. Unusually, for look-what-my-new-video-card-can-do bundled games, Drakan is both a recent release and a lot of fun, with outdoor dragon combat and indoor vaguely Tomb Raider-ish third person action.

You also get Rollcage, a fun racer-shooter from Psygnosis.

ASUS also bundle in their ASUSDVD DVD playback software, normally a $AU30 extra, with the Deluxe card. ASUSDVD is a pretty good software player, but is of course no use if you don't have a DVD-ROM drive in your computer.

To go with the video in and out options there's Ulead VideoStudio SE, an unexciting but serviceable video editing package.

The 3D glasses that come with the V6600 Deluxe are ASUS' standard VR-100 model, which I review here. In a nutshell - don't get too excited. No LCD shutter-glasses I've ever seen are all that great, and ASUS' version is below average. The software is a lot better now than it was when I first reviewed the glasses, and they do let you play most games with genuine honest-to-goodness 3D vision, but setup is still a bit annoying, they're not comfortable, and a combination of monitor persistence and imperfect opacity means they can't help but have annoying ghosting problems.

The V6600 Deluxe also works with the ASUS VideoSecurity program included on the driver disc. This disc is similar to, but not the same as, the one that comes with the plain V6600. VideoSecurity, along with a video camera, lets you use your PC as a security system. It monitors the input from the camera regularly, and when it changes significantly it can sound an alarm, capture a sequence of frames, and/or dial a pre-set phone number via your modem and then play a sound clip down the line, provided your modem has voice capabilities (most do, these days).

The VideoSecurity installer has, unquestionably, the ugliest interface buttons that I've seen this week, but it works. You can set an automatic schedule for the security monitor to run, so it kicks in outside working hours, for instance.

The running interface is rather better:

OK, so I didn't have a camera handy. So sue me.

You can set a rectangular area of the image to monitor, so trees waving outside a window won't trip the system, but someone opening a door will.
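For the curious, here's a minimal sketch of how region-limited change detection of this kind could work. It is not ASUS' actual method, which isn't documented here; the function name, the greyscale frame format and the threshold value are all assumptions made up for illustration.

```python
# Hypothetical sketch: region-limited frame differencing on greyscale frames.
# Each frame is a list of rows of 0-255 brightness values.

def region_changed(previous, current, region, threshold=20.0):
    """Return True if the mean absolute brightness change inside region
    (left, top, right, bottom; right and bottom exclusive) exceeds threshold.
    The threshold of 20 is an arbitrary illustrative value."""
    left, top, right, bottom = region
    total = 0
    count = 0
    for y in range(top, bottom):
        for x in range(left, right):
            total += abs(current[y][x] - previous[y][x])
            count += 1
    if count == 0:
        return False
    return total / count > threshold


# A tiny 4x4 "camera image". The watched region is the left half (the door);
# the right half (the window with the waving trees) is ignored.
before = [[10, 10, 10, 10] for _ in range(4)]
window_only = [[10, 10, 200, 200] for _ in range(4)]
door_opened = [[120, 120, 10, 10] for _ in range(4)]
door_region = (0, 0, 2, 4)

print(region_changed(before, window_only, door_region))  # False
print(region_changed(before, door_opened, door_region))  # True
```

Changes outside the watched rectangle never enter the sum, which is why the trees can wave all they like.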

The VideoSecurity program includes as its default sound the least effective warning message WAV file that could possibly exist. Practically inaudibly, a Chinese-sounding female says "Asoos video security warning - if you hear this message press any number". Recording your own message is... well, it's more than highly recommended, if you ask me.

This isn't an industrial strength security system - anybody who wants to trust their home or business to the stability of a Windows box needs their head read - but it's not blooming bad for bundled software.

The Deluxe card also comes with a CD packed with game demos, plus composite and Y/C video leads and a Y/C to composite adaptor cable.

The V6600 Deluxe video output, like the Leadtek card's, is of the "convertible" kind; a Y/C connector which, with a special adaptor, delivers composite video from a couple of pins unused by the Y/C cable. This means the adaptor is no use for converting other Y/C connectors to composite.

(Remember, I decode this video terminology here.)

WinFast GeForce 256

The Leadtek software bundle is better, as long as you don't want games; no entertainment titles come with either WinFast. What you do get is, I think, exactly the same collection of software that comes with all of Leadtek's current 3D cards.

There's the WinDVD package for DVD playback (provided, of course, that you have a DVD-ROM drive). There are much cheaper ways to get DVD playback happening on your PC (for one of them, and a look at how the other half lives, see my recent review of AOpen's Artist PA50V here), but TV out is a cool feature, and it's not as if the Leadtek cards are unusually expensive because of it.

The WinFast driver CD is one of Leadtek's usual efforts, containing drivers for every video card they currently make. You get specific Windows 2000 drivers along with the 95/98 and NT ones, which is a nice touch.

There's also Digital Video Producer from Asymetrix, a quite powerful but fairly awkward digital video editing package, for use with WinFast cards with video input (in other words, neither of these ones). While Digital Video Producer is neither brand new nor an especially good example of the breed, it's not blooming bad for shovelware.

Apart from that, the Leadtek software bundle is underwhelming. There's WEB 3D, which is a "3D Web authoring tool" which is about as useful as it sounds. There's WIRL, a 3D-viewing browser plug-in which is approximately one hundredth as popular as VRML, which is in turn used by roughly nobody. There's the questionably named 3D/FX, a graphics and animation tool which is conclusively better than anything you could get for the Apple II. There's VRCreator, of which it can be said that of all the seldom-used pieces of software spawned by the mid-90s excitement about virtual reality, it is definitely one of them. There's also a demo of Datapath's RealiMation STE, a 3D design and visualisation utility about which I know nothing much and wish to learn little.

If you're into serious graphics work, you may get some use out of the 3deep and Colorific software included, for colour calibration.

The Leadtek cards also come with composite and Y/C video cables to go with their video out, and a Y/C to composite adaptor cable just like the V6600 Deluxe's.

The Leadtek bundle includes absolutely nothing, demo or otherwise, that's specifically for the GeForce. No TreeMark, no Bubble, no Wanda. And no games. Hey, you get TV out and you pay less, and the DDR card is, as we'll see shortly, very very fast indeed. Don't complain.

You can download TreeMark, by the way, from here.

GeForce demos

The GeForce demos included with the ASUS boards are still in a somewhat beta-ish state, and so is their install program. It's meant to install a file called "msvcp60.dll", which lives in the demo directory on the CD, to the windows\system directory, but it doesn't. Well, it didn't for me and for at least one correspondent, anyway.

Without that DLL, the demos don't run. Copy it by hand and all is well.
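If you'd rather script the hand copy, something like the following sketch would do it. The function name is made up, and the two directory paths in the commented-out call are placeholders; substitute the demo directory on your own CD drive and your own Windows system directory.

```python
import shutil
from pathlib import Path


def copy_missing_dll(demo_dir, system_dir, dll_name="msvcp60.dll"):
    """Copy dll_name from demo_dir into system_dir unless a file of that
    name is already there. Returns the destination path."""
    source = Path(demo_dir) / dll_name
    target = Path(system_dir) / dll_name
    if not source.is_file():
        raise FileNotFoundError(f"{source} not found")
    if not target.exists():
        # Never overwrite an existing copy; another program may depend on it.
        shutil.copy2(source, target)
    return target


# Example call. Both paths are placeholders, not the real CD layout:
# copy_missing_dll(r"D:\path\to\demos", r"C:\windows\system")
```

The sketch deliberately leaves an existing DLL alone, on the assumption that whatever is already in the system directory is there for a reason.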

Wanda. I find her more creepy than cute.

Various Lara Croft lovers have expressed somewhat disturbing emotions about the Wanda demo; personally, I was more taken with the Bubble demo, which presents you with a shiny blob that you can poke with the left mouse button. The realism of its undulations is pretty darn startling.

It's been observed, however, that the bubble's gorgeousness will not be seen as anything other than an occasional special effect in any real applications for the GeForce. The bubble uses cubic environment mapping (you can switch it to the simpler spheric mapping, whereupon it gets some funny convergence artefacts at either pole), but for this to work in a dynamic environment six textures have to be rendered constantly to be mapped onto the object that's reflecting them. Which takes a whole lot of grunt.
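A rough way to see why this hurts: if every reflective object needs all six faces of its cube map redrawn each frame, the number of times the scene gets rendered climbs quickly. The sketch below just counts renders. It assumes one full set of six faces per object per frame and ignores the fact that the cube faces can be drawn at a lower resolution than the screen, so treat it as an upper-bound illustration rather than a measurement.

```python
def scene_renders_per_frame(reflective_objects, faces_per_cube_map=6):
    """Scene renders needed per displayed frame when each reflective object
    gets a freshly rendered cube map, plus one final render for the screen."""
    return 1 + reflective_objects * faces_per_cube_map


for objects in (0, 1, 2):
    print(objects, "reflective objects ->",
          scene_renders_per_frame(objects), "scene renders per frame")
# 0 reflective objects -> 1 scene renders per frame
# 1 reflective objects -> 7 scene renders per frame
# 2 reflective objects -> 13 scene renders per frame
```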

There's a variant of the Bubble demo called Guts that demonstrates this; this time, the bubble's flying down a somewhat intestine-ish tube-of-tubes. Guts only ever seems to run properly once per session on my computer (more beta behaviour...), but even when it's running, it ain't running fast.

It doesn't score much more than five frames per second with a high detail bubble. This would hurt a lot in a game; if your frame rate plummets every time a cube-mapped entity shows up, you'll be turning that feature off pretty swiftly.

Still, it's fun to poke the bubble.

Shut up. You know what I mean.

NVIDIA's page of GeForce technical and benchmarking demos is here, by the way.

Setting up

Physically plugging a GeForce card in is no more difficult than installing any other AGP graphics card. This, of course, requires that you have an AGP slot, and preferably a fairly new motherboard.

Old or suspiciously inexpensive motherboards which have an AGP slot, but use a linear regulator for their 3.3 volt power supply, are a recipe for disaster when you're setting up a current 3D accelerator. Linear regulators are good for only 2.5 amps, while the switching regulators on better boards can deliver up to 6 amps. And they need to, too; even some switching regulator boards don't cut it with current cards. 3dfx's upcoming Voodoo 4 and 5 products will have, respectively, a hard-disk-style power receptacle and a whole separate dedicated power supply, to address this problem.
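To put those current ratings in perspective, here's the simple power arithmetic (watts equals volts times amps) for the 3.3 volt rail. The function name is mine; the 2.5 and 6 amp figures are the ones quoted above.

```python
AGP_RAIL_VOLTS = 3.3


def rail_power_watts(max_amps, volts=AGP_RAIL_VOLTS):
    """Maximum power a regulator can deliver at the given current limit."""
    return volts * max_amps


linear = rail_power_watts(2.5)
switching = rail_power_watts(6.0)
print(f"Linear regulator:    {linear:.2f} W")     # 8.25 W
print(f"Switching regulator: {switching:.2f} W")  # 19.80 W
```

So a linear-regulator board tops out at well under half the power a good switching-regulator board can supply on that rail.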

If you don't know what kind of regulator your motherboard has, check with the manufacturer. Most big-name boards from the last year or two should be fine. Emphasis on the should.

Athlon users have also reported various problems with GeForce boards, which may be fixed by the specially updated drivers available from NVIDIA's standard software download site here.

Installing the Windows drivers for the cards is simple enough. Both manufacturers provide special installers for their drivers, but you can also install them by the usual Add New Hardware... method. The ASUS installer is supposed to let you uninstall the drivers afterwards, but it never seems to - this is a problem previous ASUS boards have had, too. You just get an error message telling you that you can't uninstall because you didn't install the drivers with the ASUS setup program. It doesn't matter how the heck you installed the drivers; you can't get rid of 'em.

This is a pretty normal state of affairs - most driver sets don't even appear as uninstallable software in the Windows Add/Remove Programs list. It becomes significant, though, if you're hoping to shuffle drivers or even video cards a few times without tying Windows up in knots. From bitter experience, I know that swapping similar video cards and similar drivers around can result in a nasty hodge-podge of files from different driver sets and a half-busted video setup.

You can often escape the mire by switching your video card to plain VGA and then reinstalling the driver set of your choice - and, always, ignoring the ready-installed driver list that Windows presents when you tell it to change the drivers, and manually pointing it to the location of the files using the Have Disk... option. But if this doesn't work, it's reinstall time.

Leadtek's installer is squirrelly in a different way - if you run the setup program, the drivers are installed. Wham. You don't get to click an install-the-drivers button. This may seem obvious, but it's not; most setup programs on driver disks at least give you a "Proceed" button, if not a menu of different installable things. Woe betide you if your driver setup is just fine, using the reference drivers, and you run the Leadtek installer by mistake.

Serious driver hassles aren't likely with either board, mind you. If you've got a TNT-series card at the moment, and you're running the current NVIDIA driver set (get it here), you can just power down and swap cards and your existing drivers will keep right on trucking with the new board. If you've got some non-TNT card, its drivers probably won't argue with the GeForce ones at all - although it's still a good idea to switch to the plain VGA driver before you install the new graphics card, especially if you're trading up from a PCI card to the AGP GeForce.

The standard ASUS drivers work just fine, because they're reference NVIDIA drivers practically untouched by ASUS; some earlier ASUS efforts were painfully unreliable. The drivers on the CD are only the 3.48 version, though; v3.53 is out as I write this, and that's the driver set I checked the plain V6600 out with. The Leadtek drivers seem all right too, which is to be expected; Leadtek's video card driver team always seems to be on the ball. As I write this there's a v1.06 driver release out for the DDR card, against the v1.05 drivers on the CD; you can get the latest Leadtek DDR drivers from here.

Winding 'em up

The stock clock speeds for the GeForce 256 are 120MHz for the core logic and 166MHz for the memory. All of the boards stick to these numbers by default, but it's easy to run them faster.

In case you're wondering why the clock speed looks so similar to that of the old TNT2 (125 and 150MHz, stock), don't be alarmed; at the same clock speed, you can expect the GeForce to have about twice the TNT2's rendering power, because of its four-pixels-per-clock design, versus the TNT2's two-pixels-per-clock. The GeForce is, in this respect, essentially a pair of TNT2s running in parallel.
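The "about twice" figure falls out of simple multiplication: peak fill rate is core clock times pixels per clock. This sketch uses only the stock numbers quoted above; the function name is made up, and real-world rendering speed depends on plenty of other things, memory speed included.

```python
def peak_fill_rate_mpixels(core_mhz, pixels_per_clock):
    """Theoretical peak fill rate in millions of pixels per second."""
    return core_mhz * pixels_per_clock


geforce = peak_fill_rate_mpixels(120, 4)
tnt2 = peak_fill_rate_mpixels(125, 2)
print(geforce, tnt2, round(geforce / tnt2, 2))  # 480 250 1.92
```

At stock speeds that's 1.92 times the TNT2's theoretical peak; at identical clocks it would be exactly double.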

NVIDIA have come to terms with the fact that many users of their cards want to see how much faster than stock speed they can run them, and now include a lightly hidden overclocking mode in the standard drivers. You used to have to use a program like TNTClk to pump up your TNT-series card; now you can do it by changing one Registry key, which can easily be done by installing a little patch like this one.

This allows you to go to the GeForce 256 tab in Display Properties, click the Additional Properties... button, and select the Hardware options tab. You have to restart once after turning on the overclocking feature; then, you can easily twiddle the card's clocks up and down.

Both the ASUS and Leadtek standard driver sets include overclocking utilities as well, but the V6600 Deluxe goes further.

Because the Deluxe has on-board temperature monitoring, you can use the included ASUS SmartDoctor program to automatically clock the card back when it's too hot. When SmartDoctor's auto-cooling is activated no other overclocking options will work, and I couldn't tell exactly what speed the card was running at when it was cool. It seemed about the same speed with SmartDoctor activated as when I'd manually overclocked it to 140MHz core speed, 195MHz RAM. SmartDoctor's constant monitoring of the chip voltage and fan speed is supposed to cause a slight performance hit, but I couldn't detect any speed loss. Some early ASUS hardware monitor software was appallingly bad in this regard (forget slowing the machine down, it just crashed it!), but SmartDoctor seems well written and stable.
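The clock-back-when-hot idea can be sketched in a few lines. This is emphatically not SmartDoctor's actual algorithm, which I haven't seen; the temperature thresholds, the step size and the function name are all invented for illustration, and only the 120MHz stock and 140MHz overclocked core speeds come from this review.

```python
def next_core_clock(temp_c, current_mhz, stock_mhz=120, max_mhz=140,
                    hot_c=70.0, cool_c=60.0, step_mhz=5):
    """Step the core clock down when the chip is hot, back up when it has
    cooled, and hold steady in between. All thresholds are hypothetical."""
    if temp_c >= hot_c:
        return max(stock_mhz, current_mhz - step_mhz)
    if temp_c <= cool_c:
        return min(max_mhz, current_mhz + step_mhz)
    return current_mhz


clock = 140
for temp in (55, 65, 72, 75, 68, 58):
    clock = next_core_clock(temp, clock)
    print(f"{temp} C -> {clock} MHz")
```

The gap between the "hot" and "cool" thresholds is there so the clock doesn't flap up and down every time the temperature wobbles around a single trip point.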

SmartDoctor also monitors your AGP power supply and I/O (VDDQ) voltages. If the AGP voltages are too low (they should each be not much less than 3.3 volts), it probably means your motherboard voltage regulator isn't up to the task of powering a high-drain board like the GeForce. The monitor isn't actually a very useful feature, though, because the GeForce only draws its big fat maximum current when it's in 3D mode; if you're looking at the desktop to view the voltage monitor, the 3D sections of the GeForce chip are standing idle, and the AGP voltage is probably fine.

SmartDoctor can also do software CPU cooling, which Linux and Windows NT do natively but which Windows 95 and 98 don't. This puts the CPU into standby mode when it's idle, allowing it to cool off (and draw less power, which is significant for laptop users but unimportant for desktop machines). There are other Win95/98 programs out there like Waterfall that do the same thing, and how useful they all are is questionable. Sure, they'll let your processor cool off when it's not doing anything, but the CPU will be working hard non-stop if you start playing games; wouldn't you rather know that your CPU is able to overheat itself before you get embroiled in hot deathmatch action for 20 minutes?

The plain V6600 doesn't support the fancy SmartDoctor features, so it's manual overclocking or nothing. I found it was happy, in rather warm Australian weather, at 135MHz core, 190MHz RAM. Mind you, this was in my ridiculously well-cooled case.

The SDR Leadtek card seemed OK at 150MHz core speed (the fastest the stock drivers let you set the core), but it didn't stay that way; no more than 140MHz seemed stable, and that was with the lid off the test computer and a desk fan blowing on the card. RAM overclockability wasn't any better with the Leadtek, either, which was odd; it has 5nS RAM versus the 5.5nS on the non-Deluxe ASUS board. 190MHz seemed to be the ceiling, though; 200MHz hung instantly. 195MHz or something might be possible, but chasing those last few megahertz is silly; it's worth practically nothing, performance-wise, and if you run your card right on the ragged edge, the first hot day to come along will give you a free ticket to crashland. The DDR Leadtek has the same cooler as the SDR one, so its core speed can't be wound any higher.

Manually overclocking the V6600 Deluxe didn't give much better results than the plain V6600.

The Deluxe uses 5nS SGRAM versus the 5.5nS SDRAM on the plain V6600, and this allows ludicrous RAM overclockability if you use a program like TNTClk that supports it (the standard overclocker tops out at 195MHz RAM speed).
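If the nanosecond rating on a RAM chip is read as its minimum clock period (an assumption, though it's the usual interpretation), you can turn it into a nominal clock speed by dividing 1000 by the rating. The function name here is mine.

```python
def rated_mhz(cycle_time_ns):
    """Nominal maximum clock in MHz for RAM with the given cycle time."""
    return 1000.0 / cycle_time_ns


for ns in (5.0, 5.5):
    print(f"{ns} ns RAM -> about {rated_mhz(ns):.0f} MHz rated")
# 5.0 ns RAM -> about 200 MHz rated
# 5.5 ns RAM -> about 182 MHz rated
```

On that reading, 190MHz is already past the nominal rating of 5.5nS memory, while 5nS memory is nominally good for 200MHz. What any given card actually manages is another matter, as the results above show.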

Cranking the heck out of the RAM, though, achieves little if you can't wind up the core speed, too. This is true of the surprisingly RAM-overclockable Butterfly TNT2 Model 64 card (reviewed here), and it's true of the V6600 Deluxe, too.

I couldn't get the Deluxe's core speed higher than 140MHz and retain stability, although this review at Overclockers.com.au reports success with 160MHz core, which along with the 210MHz RAM speed they managed makes for roughly 10% better performance than I achieved. They were testing a pre-release board, though, while I checked out the full retail package; this could account for the difference, or it could just be the normal variability between cards.

Overall, the Leadteks seem no more overclockable than the plain ASUS board, and the ASUS V6600 Deluxe card, at least the one I tried, is only trivially better. But you can wring better than a 10% margin over stock speed out of any of them.

10%, by the way, is the generally accepted threshold below which a performance increase isn't actually noticeable - see the blue bar to the right. If there's no loss of reliability (which is hard to judge on the crash-prone high performance Windows 95/98 systems most gamers run) then hey, add 5%, maybe you'll get two more frags per hour out of it. But if there's a lot of fiddling involved, or you have to spend money on a better heatsink for the card - stop and think about the return you're going to get. If it's under 10%, I believe your time is better spent playing more games, talking to a family member or, possibly, even going out in the sunlight for a little while.
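Here's the arithmetic behind that margin, using the stable clock speeds reported above against the 120MHz core and 166MHz memory stock figures. Bear in mind these are clock speed increases; the frame rate gain you actually see will generally be smaller. The function and dictionary names are just for illustration.

```python
STOCK_CORE_MHZ = 120
STOCK_RAM_MHZ = 166


def percent_over_stock(clock_mhz, stock_mhz):
    """Percentage by which clock_mhz exceeds stock_mhz."""
    return (clock_mhz / stock_mhz - 1.0) * 100.0


# (core MHz, RAM MHz) that seemed stable in the tests described above.
stable_clocks = {
    "ASUS V6600": (135, 190),
    "ASUS V6600 Deluxe": (140, 195),
    "Leadtek WinFast (SDR)": (140, 190),
}

for card, (core, ram) in stable_clocks.items():
    print(f"{card}: core +{percent_over_stock(core, STOCK_CORE_MHZ):.1f}%, "
          f"RAM +{percent_over_stock(ram, STOCK_RAM_MHZ):.1f}%")
# ASUS V6600: core +12.5%, RAM +14.5%
# ASUS V6600 Deluxe: core +16.7%, RAM +17.5%
# Leadtek WinFast (SDR): core +16.7%, RAM +14.5%
```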

Chip coolers

The plain V6600 is fitted with a low-profile chip cooler of the conventional thin-fan-on-a-thin-heatsink type. The Leadtek boards have the fan-inset-into-heatsink design instead. Both coolers are less than brilliant, but anything much fatter than the ASUS unit (which stands considerably taller than the Leadtek one) will obstruct the next slot along from the video card. Dedicated video card overclockers often forfeit their first PCI slot for some extra speed. Even as it stands, the plain V6600's cooler may foul unusually wide PCI cards in the adjacent slot. The Leadtek cooler should be fine in any configuration.

The V6600 Deluxe has another low-profile cooler, this time with funky low profile spiral fins on the heatsink that keep the whole assembly nice and skinny. The significant thing about it, though, isn't the heatsink design - it's the fan. This is a three-wire, speed-reporting fan, which in concert with a built-in temperature probe and the Winbond hardware monitor chip on the V6600 Deluxe lets the card keep track of its chip temperature and fan performance. It can sound an alarm if the fan speed drops, and it can also change the clock speed of the GeForce chip in response to its temperature. More on this in the overclocking section, above.

Making numbers

At the same clock speed, SDR GeForce cards perform identically. This is the case with all shared-chipset boards; assuming identical clock speeds, you'll get the same performance out of the most expensive big-brand card as you get from the cheapest and most cheerful Taiwanese knock-off. Of course, the big-brand card may have higher rated RAM and a better chip cooler and superior overall construction and so be able to run a bit faster. Since the ASUS and Leadtek boards seem pretty much identical as regards overclockability, I only ran benchmarks on the plain V6600, and then compared it with the DDR Leadtek.

I checked the V6600 out first against my old V770 TNT2, both driven by a 500MHz Celeron, which is pretty much exactly equal in speed to an identically clocked Pentium II.

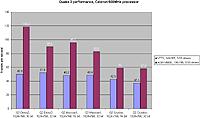

Far and away the most spectacular result came, unsurprisingly, from NVIDIA's TreeMark.

TreeMark is a benchmark specially made to demonstrate the GeForce's ability to speedily deal with scenes with lots and lots of polygons in them and dynamic lighting. And the GeForce trampled the V770 into the mud on this test, clocking in at about seven times the older card's speed for both the simple and complex versions of the test.

The results for other tests were less astounding, but still impressive. Quake 2 at 1024 by 768 is thoroughly playable on the TNT2, but the GeForce streaked away from it, especially when playing the standard demo2 demo, whose low complexity meant the CPU wasn't the limiting factor. Demo2 is representative of the performance you get in a single player game.

The more complex Massive1 and Crusher demos, of hectic multiplayer games, brought the GeForce's results down a bit, but it still spanked the V770 handily.

It didn't hang about in the simple WinTune 98 benchmark, either.

The 2D results are practically identical - any video card on the market today has 2D performance rather faster than any normal user needs - but 3D performance is respectably higher for the newer card. Incidentally, the GeForce supports resolutions up to a staggering 2048 by 1536 in 16 bit colour (and at 75Hz too - none of your crummy flickervision 60Hz modes), and can do 32 bit all the way to 1920 by 1440.

The 3D WinTune results put the GeForce comfortably ahead, but not amazingly so. This is not very surprising, since WinTune is not well suited to showing off the GeForce's abilities; its tests are quite simple. Then again, this does make WinTune much more representative of the results you'll see from many current games than the polygon-rich TreeMark.

It's been claimed that the 3.53 drivers aren't very well optimised for the TNT2, giving the GeForce an unfair advantage, but I noticed no great difference when I went back to the old v2.08 Detonator drivers, which are still used by many TNT and TNT2 owners who like their stability. You can try the experiment yourself, of course.

NVIDIA's page here lets you grab the latest version of the Detonator drivers, but they don't seem to archive the previous incarnations. Fortunately, plenty of other places do. You can get various older Detonator versions from here, for instance.

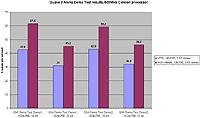

I also checked out the then freshly released Quake 3 Arena Demo Test on the GeForce.

I tried both of the included demos, in 1024 by 768 in 16 and 32 bit. They're reasonably hectic multiplayer action, though not real torture tests.

Q3 lets you use any combination of 16 or 32 bit textures with a 16 or 32 bit main video mode. This graph shows the results for 16 bit mode with 16 bit textures, and 32 bit mode with 32 bit textures. The results changed only slightly when I changed texture modes; if I had to choose, I'd pick 16 bit mode with 32 bit textures, for speed and prettiness, but in the heat of battle you really can't tell the difference unless you stop and stare at the sky, or pay unhealthy attention to the dithering of overlapping smoke effects.

32 bit textures didn't overflow the available memory of the V770 card and monstrously reduce performance, as happened in earlier test versions of Q3 when I used uncompressed 32 bit textures. The V770 actually gave a very good account of itself; at around 70% of the GeForce board's performance, it was clearly delivering more bang per buck.
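A back-of-the-envelope version of the bang-per-buck sum, for the Q3 test only. It takes the roughly 70% relative performance figure from above, $459 for the plain V6600, and $250 for the V770. That last price is an upper bound, since the card sells for "well under" that, so the real gap is wider than this suggests.

```python
def performance_per_100_dollars(relative_performance, price_aud):
    """Relative performance units bought per 100 Australian dollars."""
    return relative_performance / price_aud * 100.0


v770 = performance_per_100_dollars(0.70, 250)
v6600 = performance_per_100_dollars(1.00, 459)
print(f"V770:  {v770:.3f}")            # 0.280
print(f"V6600: {v6600:.3f}")           # 0.218
print(f"ratio: {v770 / v6600:.2f}")    # 1.29
```

Even at the pessimistic $250 price, the TNT2 card delivers nearly 30% more Quake 3 performance per dollar.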

DDR benchmarks

Time to wind up the new card.

This time, the test machine is a humble 433MHz Celeron. This means the test results will be considerably less impressive than the numbers you'd see from a hot current processor, but that's not important - the important issue with DDR is how it keeps the frame rate up as the resolution climbs.

No matter what resolution you run a 3D game in, if you're using a 3D accelerated video card, your CPU has to do exactly the same amount of work. It just feeds the geometry data to the video card. If things get slower as you raise the resolution, it's because the video card's having a hard time painting all of those pixels. So it doesn't matter very much, within reason, how fast or slow your CPU is, if you're just trying to see how much better one video card is at a given resolution than another. If the resolution is low enough that the CPU is the limiting factor then the results for the different cards will be much the same, but to get an idea of the difference you just have to wind the resolution up until the results separate.
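This argument can be put as a toy model: the frame rate you see is whichever is lower, the rate the CPU can feed geometry or the rate the card can paint pixels. Every number below is made up purely to show the shape of the curve, including the CPU limit, the two cards' effective fill rates and the overdraw factor; none of them are measurements from this review.

```python
def frame_rate(cpu_fps, fill_rate_pixels_per_sec, width, height, overdraw=3.0):
    """Toy model: the frame rate is capped by the slower of the CPU and the
    video card. overdraw is the average number of times each screen pixel
    gets painted per frame (a hypothetical value)."""
    card_fps = fill_rate_pixels_per_sec / (width * height * overdraw)
    return min(cpu_fps, card_fps)


CPU_FPS = 60.0          # hypothetical CPU-limited frame rate
SLOWER_CARD = 150e6     # hypothetical effective pixels per second
FASTER_CARD = 250e6     # hypothetical effective pixels per second

for width, height in ((640, 480), (800, 600), (1024, 768),
                      (1280, 1024), (1600, 1200)):
    slow = frame_rate(CPU_FPS, SLOWER_CARD, width, height)
    fast = frame_rate(CPU_FPS, FASTER_CARD, width, height)
    print(f"{width}x{height}: slower card {slow:.1f} fps, "
          f"faster card {fast:.1f} fps")
```

With these invented numbers both cards sit at the CPU limit in the lower resolutions, and the results only separate once the resolution gets high enough for the slower card to become the bottleneck.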

To avoid blurring the lines, I ran both GeForce cards for this comparison at stock speed. I've previously established that you can wring another 10% or so out of them; big deal. Stock speed demonstrates the difference just fine.

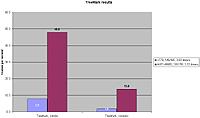

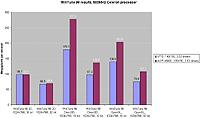

First, the WinTune numbers.

2D speed is functionally identical. Both cards scream. But get a load of the raw Direct3D and OpenGL results!

WinTune asks very little of the processor, geometry-wise, so faster video cards get to stretch their legs without waiting for the CPU. And the DDR conclusively smacks the SDR card into the middle of next week in such tests. 55% faster for Direct3D, 64% faster for OpenGL.

This represents the most you can expect to get out of the DDR board, compared with SDR. Games are a more complex load, and so the raw rendering difference gets leavened by other factors.

Time for Quake 3 Arena, the full version this time. I tried Q3A Demo 1 in 800 by 600, 1024 by 768, 1280 by 1024 and the previously ridiculous 1600 by 1200 resolution, in 16 and 32 bit. Every possible pretty-option was on; 32 bit textures, high object detail, lightmap lighting, maximum texture detail, and trilinear texture filtering. Sound was on, but set to low quality.

In the lower resolutions, both cards eat up data from the CPU as fast as it can dole it out; it's not until 1024 by 768 in 32 bit comes along that a significant gap opens up. As the resolution rises, the DDR board pulls ahead. Not miles ahead, but ahead. It's got about 25% on the SDR GeForce in 1600 by 1200.

The last result, 1600 by 1200 in 32 bit, overran the 32Mb of video memory on both boards when I had texture detail set to 32 bit. Q3A's textures aren't all that gigantic, but when you're running 1600 by 1200 in 32 bit, every single frame takes up about 7.3 megabytes of memory (1,920,000 pixels at four bytes each). Double buffer it and you're looking at nearly 15Mb. Textures eat another big chunk of memory, and then there's the non-trivial amount of RAM the video card has to use to actually do work in - the card has no on-board storage other than its video RAM, and it uses it as scratch space for its highly specialised processing. Hence, 1600 by 1200 with everything turned on needs more than 32Mb of RAM. The video card makes space by not keeping the full set of textures it needs in its on-board storage.
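The frame buffer sums are easy to check. Here's a minimal sketch, assuming 32 bit colour is stored as four bytes per pixel and that the card keeps one front buffer and one back buffer. It ignores the depth buffer, textures and working space, and the function name is mine. At three bytes per pixel the same sum gives about 5.5 megabytes per frame; at four it gives about 7.3.

```python
MIB = 1024 * 1024  # bytes in a megabyte, as memory sizes are counted


def frame_bytes(width, height, bytes_per_pixel):
    """Memory needed to hold one complete frame, in bytes."""
    return width * height * bytes_per_pixel


for bytes_per_pixel in (3, 4):
    one_frame = frame_bytes(1600, 1200, bytes_per_pixel)
    double_buffered = 2 * one_frame  # front buffer plus back buffer
    print(
        f"{bytes_per_pixel} bytes/pixel: "
        f"{one_frame / MIB:.1f} MB per frame, "
        f"{double_buffered / MIB:.1f} MB double buffered"
    )
```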

This results in utterly miserable performance, below 5 frames per second, as the boards desperately flog textures into and out of main memory. Not a glimmer came there from the hard disk light; it was all AGP bus grinding. With an AGP 4X motherboard the results would no doubt have been less abysmal, but it still would have sucked.

Chopping back the texture detail to 16 bit pulled the total RAM needed back under 32Mb, and frame rates perked up again, but neither card was a terribly fast mover even then - the DDR card sweated its way to 17.4 frames per second overall, with troughs considerably lower.

Turning off some of the prettiness made a big difference, though. I went on to select minimum texture detail (making all of the surfaces look blurry). The DDR board now managed 43.4 fps for 16 bit and 35.1 fps for 32 bit in 1280 by 1024 - quite playable. 1600 by 1200 gave 36.1 and 23.9 fps, respectively.

Then I tried maximum ugliness. 16 bit depth, 16 bit textures, bilinear filtering, low object detail and minimum texture detail. I also selected vertex lighting, which utterly destroys the groovy Gothic ambience of Quake 3 but also makes the game substantially faster, and furthermore makes it a lot easier to see your enemies. These are all the settings you should pick if you're in it to get maximum frame rate from a given resolution, and don't give much of a damn about the looks of the thing.

The result?

42fps.

42 frames per second. From a lousy 433MHz Celeron. In, I remind you, 1600 by 1200. That's more than eighty million actual genuine you're-playing-it game pixels painted per second, kids. It's less than the local Frag God probably wants to see, but it's not freakin' bad for a CPU that practically comes free in Corn Flakes boxes.
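For the doubters, the pixel count is just width times height times frame rate. This sketch counts only the pixels that end up on screen; it takes no account of overdraw, so the card is really painting more than this. The function name is mine.

```python
def displayed_pixels_per_second(width, height, fps):
    """Pixels that actually reach the screen each second, ignoring overdraw."""
    return width * height * fps


print(displayed_pixels_per_second(1600, 1200, 42))  # 80640000
```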

If you're a deathmatcher who wants Ludicrous Res for better railgun aiming at distant targets, then you will not need a godlike CPU to make use of a DDR GeForce. A bit more grunt than this lousy Celeron would be nice, but you don't need a liquid-cooled 1GHz wank-o-PC. Wind back the detail, crank up the res, and enjoy.

And regular folks who want to play single player or more relaxed multiplayer 3D games should care about DDR, too; it'll let you step your resolution up one or two levels from whatever's fast enough for you on an SDR GeForce, without losing any speed. 1600 by 1200 is no longer that stupid resolution you pick to see just how low a benchmark result you can get out of your computer; if your monitor can handle it, a DDR GeForce lets you go for it.

Incidentally, if you'd like to read a lavishly illustrated treatise on the subject of Quake 3 tweaking, check out Mike Chambers' Quake 3 Arena Tune-Up Guide on nV News, here.

Benchmarketing and innumeracy

A lot of computer users, particularly overclockers, display a strange kind of innumeracy when it comes to interpreting hardware performance statistics. To be able to tell what's really faster, you need to understand measurement error, and have a sense of proportion.

Error is how much the results generated by a given system running a given benchmark can vary from test to test. It's the inherent inaccuracy of each test. Benchmarks that automatically run several times and average out the result tend to have a very small error, but 3D game frame rate tests often have error margins of around one per cent. Ergo, if you run the same frame rate test on two different set-ups and one scores one per cent faster than the other, there's no reason to believe it's actually faster at all. Maybe it just made a high number for this run, and the other one made a low one. You have to run multiple tests and average the results - NOT just pick the fastest one, as less scrupulous overclockers might be tempted to do - to be sure.
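Here's a rough sketch of doing it properly: average several runs from each set-up, then see whether the gap between the averages is any bigger than the benchmark's error margin. The frame rates below are invented, the one per cent margin is just the ballpark figure mentioned above, and `compare_runs` is a name I made up. A real comparison would want a proper statistical test.

```python
from statistics import mean


def compare_runs(runs_a, runs_b, error_margin=0.01):
    """Average each set of runs and report whether the gap exceeds the margin.

    Returns (average_a, average_b, relative_gap, gap_is_meaningful).
    """
    avg_a = mean(runs_a)
    avg_b = mean(runs_b)
    relative_gap = abs(avg_a - avg_b) / min(avg_a, avg_b)
    return avg_a, avg_b, relative_gap, relative_gap > error_margin


# Made-up frame rates from five runs on each of two set-ups.
setup_a = [61.2, 60.7, 61.0, 60.9, 61.1]
setup_b = [60.8, 61.3, 60.6, 61.0, 60.9]

avg_a, avg_b, gap, meaningful = compare_runs(setup_a, setup_b)
print(f"A averages {avg_a:.2f} fps, B averages {avg_b:.2f} fps")
print(f"Gap is {gap:.2%}; meaningful: {meaningful}")
```

Picking the single best run from each list would make set-up B look faster (61.3 against 61.2), even though its average is slightly lower and the whole gap is well inside the error margin.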

The sense of proportion comes into play in interpreting the results. Let's say you absolutely definitely get 98 frames per second on a given test, averaged out over ten repeats of the test, and someone else testing just as carefully gets 94 frames per second. Wowie! You're four FPS faster! Call the papers!

Well, big, fat, hairy deal.

You've got a 4% advantage. If you sat people in front of either machine and didn't tell them which was meant to be faster, they wouldn't be able to tell. Maybe, overall, people would get slightly more frags on the faster box. But if you think they'd get 4% more frags, you'd probably be wrong.

If, on the other hand, someone has a less exciting machine that does 20FPS, and does something to it that makes it do 24FPS, the change is more drastic. Now we're talking about a 20% improvement. This difference would be noticeable, and thoroughly worthwhile.

It's the proportion that matters, not the after-minus-before difference.
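In code, the two examples above look like this. Both are a four frames per second gain, but the percentages are very different. The function name is mine.

```python
def percent_gain(before, after):
    """Improvement expressed as a percentage of the starting figure."""
    return (after - before) * 100 / before


for before, after in [(94, 98), (20, 24)]:
    print(
        f"{before} -> {after} fps: "
        f"+{after - before} fps, {percent_gain(before, after):.1f}% faster"
    )
```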

This becomes more significant when you consider that quite a few overclockers spend a remarkable amount of time and, often, money, chasing those last few per cent. The box I'm typing this on has a 400MHz Celeron in it, chugging along happily at 500MHz. The case is well cooled (very well, in fact - see how here), but the Celeron has the stock, not at all exciting Intel Socket 370 CPU cooler on it. It's probably the cooler that's holding it back; with a better cooler I could goose the thing up to 550MHz.

But 550MHz is only 10% faster than 500MHz. Sure, it's 50 whole megahertz faster, and if it were possible to run a P-II class processor like the Celeron at a mere 50MHz then it'd still have around twice the CPU grunt of the 40MHz 68030 Amiga I used to be so proud of, but that's immaterial. 10% is 10%, and it's a barely noticeable improvement.

Many overclockers work hard to get these slight incremental improvements. A relatively cheap Peltier cooler, or a big fat passive one, will get most C-400s reliably to 550MHz. But with a water jacket or vapour phase cooling you might be able to hit 616MHz!

Well, that's a whole lot of time, effort and money for another 12%, isn't it?

Maximally overclocked computers are also frequently irritatingly unstable, usually for thermal reasons. My wind tunnel PC will fall on its face after maybe ten minutes of up-time in summer if I forget to turn on the main fan. So overclockers often have to settle for a speed a notch or three slower than the peak their bodacious cooling systems can manage, so as to avoid crashes whenever the sun shines.

All this makes me rather glad that video cards like the V6600 Deluxe have come along. Sure, you can probably wind it up a bit further with manual tweaking than its management software will do automatically. But the difference isn't much, and it won't crash on a hot day - it'll just slow itself down, sensibly.

Future options

The GeForce is the first of a new wave of ultra-3D cards, which will continue with S3's recently announced Savage 2000 chipset (overall a little slower than the GeForce), and 3D pioneer 3dfx's upcoming Voodoo 4 and 5. All of them will, with appropriate games, deliver spectacularly better performance, justifying their substantially higher prices, and the V4 and V5 will have alternative power supply arrangements to take the load off the AGP bus and so should be more generally compatible.

But cards this powerful are still, at the moment, overkill. Glorious overkill, perhaps, but still overkill.

Six months is a long time in computing; by half way through 2000, there will be plenty of games around that can make use of a GeForce's special talents, and the boards themselves will be a good deal cheaper. And DDR boards will be more common and cheaper, as will the big fat processors they like to have driving them.

To buy or not to buy...

If you've got a decent 3D card at the moment (a TNT or TNT2, or a Voodoo 3, for instance) and you're considering upgrading, I recommend you hold off until the games catch up with the hardware. If you've got a graphics card with lousy-to-no 3D capability, though, a GeForce bought today will not fall far behind the pack for quite a while. It's not the greatest of bargains, but neither is it ludicrously expensive - well, as long as you don't buy the V6600 Deluxe, whose super-features are no use for most people.

Between the plain V6600 and the SDR WinFast, I preferred the Leadtek board originally because it was a bit cheaper, and came with TV out and DVD player software. It's lost its price advantage now, but the other ones still stand. If you just want a board to use with a DVD-ROM drive for movies, you needn't get a GeForce; there are lots of cheaper cards that can do the job just as well. But it's a nice addition to an already great card. The Leadtek software bundle is otherwise not very exciting, but aside from the nifty demos and a couple of pretty old games the ASUS bundle with the plain V6600 has nothing going for it, either.

The DDR Leadtek gives you up to about 25% more performance, depending on your processor speed, game software and monitor capabilities, for the 20% more money it costs you. Not a bad deal, but only if high resolutions and 32 bit colour are important to you.

Even for the basic Leadtek card, though, you're still talking a substantial chunk of change. For much the same money you could get a perfectly good 16Mb TNT2 and an 8Gb hard drive, or a Voodoo 3 2000 and another 64Mb of memory, or a 32Mb Matrox G400 and go home with plenty of change in your pocket. And don't even start about the V6600 Deluxe; all the bells and whistles it may have, but they don't come for free!

So if you're looking to spend well under $AU500 on a new 3D card, you'll either have to wait a while or settle for a nice little TNT2 or similar older card. As the Quake 3 results above show, a TNT2 may be only half the price of an SDR GeForce, but it sure isn't only half the speed.

If money is no object and/or you've got to be the first on your block, get a GeForce now. The V6600 is a perfectly good one; the plain Leadtek is a little better overall, the DDR Leadtek is the speed king, and the V6600 Deluxe is great if you can use its extra features, don't want to muck about with manual overclocking, and can afford it. If the better game bundle with the more expensive brand name GeForce cards appeals to you then get one of them instead, but you lose nothing performance-wise with the cheaper ones.

If you can stand to wait, though, do.
